仔细看了看ptmalloc的源代码,深入了解了一下linux下堆分配回收的机制和流程

其中也借鉴了不少网上的资料,不过多半也不是很靠谱,所以我花了不少时间仔细阅读了关键代码,结合网上的资料,写下这篇文章,如有疏漏或者理解错误,欢迎指正~

以下的分析及源代码皆基于glibc2.27

Bins

要讲ptmalloc,不得不先讨论的,就是bins

在我之前的文章中,有提到过bins,但是并没有很详细的介绍

在glibc2.27中,除了之前(glibc2.25之前)所实现的fastbins、smallbins、largebins、unsortedbins之外,还实现了另一种机制:tcache

接下来我就一个个讲讲这几个bins

Tcache(bins)

实际上tcache是从glibc2.26开始实现的一种机制,只是Ubuntu18.04的发行版采用了glibc2.27,所以我这边就拿glbc2.27来说了

其优先级在内存分配上是最高的,设计tcache的初衷是为了进一步优化linux系统上内存分配的效率

其引入了两个重要的结构体: tcache_entry和tcache_perthread_struct

tcache_entry定义如下1

2

3

4

5

6/* We overlay this structure on the user-data portion of a chunk when

the chunk is stored in the per-thread cache. */

typedef struct tcache_entry

{

struct tcache_entry *next;

} tcache_entry;结构体

tcache_entry用于链接空闲的 chunk 结构体,其中的next指针指向下一个大小相同的 chunk。这里的

next指针与其他bins的fd以及bk不一样,其指向的是data区域,而不是堆块的header部分tcache_perthread_struct定义如下1

2

3

4

5

6

7

8

9

10

11

12

13

14/* There is one of these for each thread, which contains the

per-thread cache (hence "tcache_perthread_struct"). Keeping

overall size low is mildly important. Note that COUNTS and ENTRIES

are redundant (we could have just counted the linked list each

time), this is for performance reasons. */

typedef struct tcache_perthread_struct

{

char counts[TCACHE_MAX_BINS];

tcache_entry *entries[TCACHE_MAX_BINS];

} tcache_perthread_struct;

static __thread tcache_perthread_struct *tcache = NULL;每个 thread 都会维护一个

tcache_prethread_struct,它是整个tcache的管理结构,一共有TCACHE_MAX_BINS个计数器和TCACHE_MAX_BINS项tcache_entry,其中tcache_entry用单向链表的方式链接了相同大小的处于空闲状态(free 后)的 chunk,这一点上和fastbin很像。counts记录了tcache_entry链上空闲 chunk 的数目,每条链上最多可以有 7 个 chunk。

基本的tcache工作流程大致如下,详细的malloc工作流程将在后面介绍

- 第一次 malloc 时,会先 malloc 一块内存用来存放

tcache_prethread_struct - free 内存,且 size 小于 small bin size 时

- 在

tcache机制实现之前,系统会将堆块放到fastbins或者unsortedbins中 - 在

tcache机制实现之后则是 - 先放到对应的

tcache中,直到tcache被填满(默认是 7 个) tcache被填满之后,再次 free 的内存和之前一样被放到fastbin或者unsorted bin中tcache中的 chunk 不会合并(不取消inuse bit)

- 先放到对应的

- malloc 内存,且 size 在

tcache范围内 - 先从

tcache取 chunk,直到tcache为空 tcache为空后,从 bins中找tcache为空时,如果fastbins/smallbins中有size 符合的 chunk,会先取下此chunk,并尝试将此chunk大小对应的bin中剩余的相同大小的堆块放到tcache中直到填满,而如果unsortedbins中有size 符合的 chunk,那么就会直接将这个chunk放入tcache,并继续遍历unsortedbins,当循环达到一定的次数后,才会返回tcache中对应大小的堆块(下文详细讲malloc流程时会提到细节)

Fastbins

fastbins是在tcache机制实现之前,为了提高内存分配效率而出现的机制,其中每个bin都遵循LIFO策略

fastbins和tcache很像的一点在于都采用了单向链表进行管理

在64位系统上,fastbins默认拥有七个bin(实际代码中最多支持10个),大小从0x20-0x80

具体的分配、合并流程将在下文详细介绍

Smallbins

smallbins 中每个 chunk 的大小与其所在的 bin 的 index 的关系为:chunk_size = 2 * SIZE_SZ * index,具体如下

| 索引 | SIZE_SZ=4(32 位) | SIZE_SZ=8(64 位) |

|---|---|---|

| 2 | 16 | 32 |

| 3 | 24 | 48 |

| 4 | 32 | 64 |

| 5 | 40 | 80 |

| x | 2*4*x | 2*8*x |

| 63 | 504 | 1008 |

smallbins中一共有 62 个循环双向链表,每个链表中存储的 chunk 大小都一致。比如对于 32 位系统来说,下标 2 对应的双向链表中存储的 chunk 大小为均为 16 字节。每个链表都有链表头结点,这样可以方便对于链表内部结点的管理。此外,small bins 中每个 bin 对应的链表采用 FIFO 的规则

LargeBins

largebins中一共包括 63 个 bin,每个 bin 中的 chunk 的大小不一致,而是处于一定区间范围内。此外,这 63 个 bin 被分成了 6 组,每组 bin 中的 chunk 大小之间的公差一致,具体如下:

| 组 | 数量 | 公差 |

|---|---|---|

| 1 | 32 | 64B |

| 2 | 16 | 512B |

| 3 | 8 | 4096B |

| 4 | 4 | 32768B |

| 5 | 2 | 262144B |

| 6 | 1 | 无限制 |

以 32 位平台的 largebins为例,第一个 largebins 的起始 chunk 大小为 512 字节,位于第一组,所以该 bin 可以存储的 chunk 的大小范围为 [512,512+64)

UnsortedBins

unsortedbins 可以视为空闲 chunk 回归其所属 bin 之前的缓冲区

unsortedbins处于我们之前所说的 bin 数组下标 0、索引1 处。故而 unsortedbins 只有一个链表。unsortedbins 中的空闲 chunk 处于乱序状态,主要有两个来源

- 当一个较大的 chunk 被分割成两半后,如果剩下的部分大于 MINSIZE,就会被放到

unsortedbins中。 - 释放一个不属于

fastbins的 chunk,并且该 chunk 不和top_chunk紧邻时,该 chunk 会被首先放到unsortedbins中(紧邻时,会触发合并的操作,该chunk将会并入top_chunk)。

unsortedbins采用的顺序是FIFO

Index

前面在smallbins中提到了index,即索引

在这边要详细说一下ptmalloc中的索引,因为在ptmalloc中,索引与对应的bin相对于malloc_state中bins数组的起始地址的偏移(即数组下标)之间有着转换关系。

我们以大小为0x60的smallbins中的bin来举个例子

来看一下详细的代码

首先,索引的大小与bin中堆块的大小有关,由smallbin_index宏实现

1 | idx = smallbin_index (nb); |

通过计算,可以得出index为6

其实际数组下标的计算由bin_at宏实现

1 | typedef struct malloc_chunk *mbinptr; // mbinptr占据8个字节 |

可以算出实际的偏移应该是(m)->bins[((6) - 1) * 2]->(m)->bins[10]

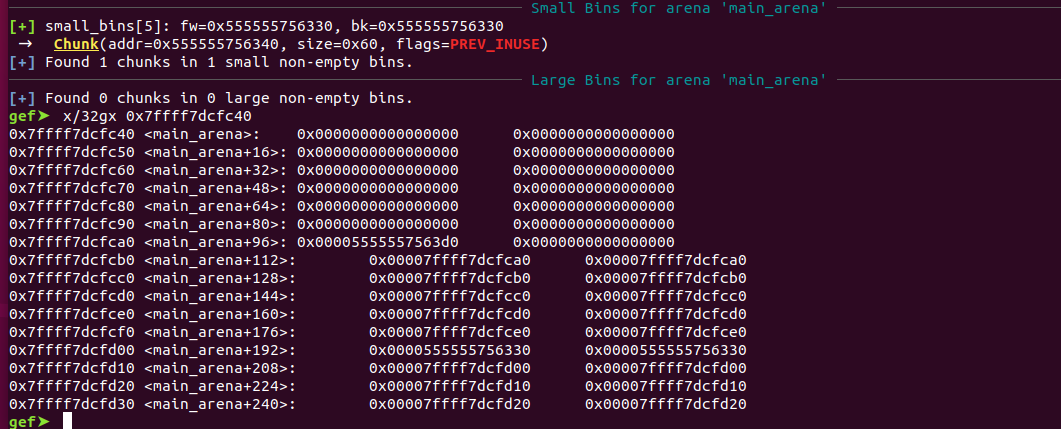

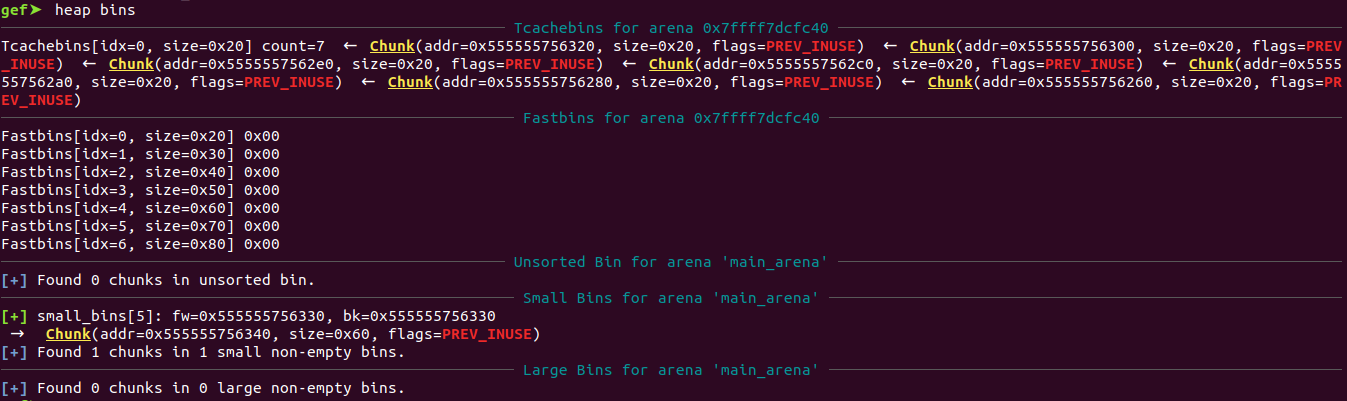

看下调试的情况

可以看到0x7ffff7dcfcb0处是malloc_state中的bins数组的起始地址,也同样是unsortedbins的地址

在0x7ffff7dcfd00处存储着大小为0x60的对应的smallbins的bin信息

那么实际上存储smallbins中存储堆块大小为0x60的bin的起始地址的地址距离malloc_state中的bins数组的起始地址的偏移距离为0x50,相当于是bin[10]

所以索引值并不等于其在数组中的下标(index=6,下标为10)

注:索引值只是逻辑意义上的一个值,切勿将其当作数组下标

1 | struct malloc_state |

Malloc

bins大致介绍完了,接着就来讲讲malloc的详细流程

__libc_malloc

实际上如果我们仔细看glbc的源代码,我们可以发现malloc函数并不存在,我们调用的malloc实际上是__libc_malloc

所以我们从这里开始

首先检查是否存在用户自定义的hook函数,如果存在,直接调用后返回

1

2

3

4

5

6

7

8

9

10void *

__libc_malloc (size_t bytes)

{

mstate ar_ptr;

void *victim;

void *(*hook) (size_t, const void *)

= atomic_forced_read (__malloc_hook);

if (__builtin_expect (hook != NULL, 0))

return (*hook)(bytes, RETURN_ADDRESS (0));之后检查

tcache是否存在恰好对应大小的堆块,如果存在,直接取对应大小的堆块并返回此堆块,结束malloc流程1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

/* int_free also calls request2size, be careful to not pad twice. */

size_t tbytes;

checked_request2size (bytes, tbytes);

size_t tc_idx = csize2tidx (tbytes);

MAYBE_INIT_TCACHE ();

DIAG_PUSH_NEEDS_COMMENT;

if (tc_idx < mp_.tcache_bins

/*&& tc_idx < TCACHE_MAX_BINS*/ /* to appease gcc */

&& tcache

&& tcache->entries[tc_idx] != NULL)

{

return tcache_get (tc_idx);

}

DIAG_POP_NEEDS_COMMENT;上面这步有个函数需要讲一下,就是

checked_request2size,这个函数主要是将用户所请求的内存大小换算成实际申请的内存大小1

2

3

4

5

6

7

8

9/* pad request bytes into a usable size -- internal version */

// MALLOC_ALIGN_MASK = 2 * SIZE_SZ - 1 = 15

// 这里只加一个SIZE_SZ的原因是由于下一个堆块的头部prev_size可以复用

// 所以原本需要的内存 = 用户申请的内存大小+chunk头(16字节)改为了

// 内存 = 用户申请的内存大小+SIZE_SZ(8字节)

(((req) + SIZE_SZ + MALLOC_ALIGN_MASK < MINSIZE) ? \

MINSIZE : \

((req) + SIZE_SZ + MALLOC_ALIGN_MASK) & ~MALLOC_ALIGN_MASK) // 保证实际申请的内存大小满足用户需求,并且16字节对齐如果不存在恰好大小的堆块,进入

_int_malloc函数1

2

3

4

5

6

7if (SINGLE_THREAD_P) // 判断是否是单线程

{

victim = _int_malloc (&main_arena, bytes);

assert (!victim || chunk_is_mmapped (mem2chunk (victim)) ||

&main_arena == arena_for_chunk (mem2chunk (victim)));

return victim;

}

_int_malloc

函数_int_malloc是malloc中最重要的一部分,实际上大部分功能都是在这个函数中实现的

在

_int_malloc函数中,先判断实际需要分配的堆块是否在fastbins的大小范围之内,如果属于,那么就检查fastbins中是否有大小正好相等的堆块,如果存在这样的堆块,那么先从fastbins对应的bin中取下此堆块,并将此bin中剩余的所有堆块全部放入相对应大小的tcache中,最后返回之前从bin中取下的堆块,结束malloc流程1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52if ((unsigned long) (nb) <= (unsigned long) (get_max_fast ())) // 判断是否属于fastbins

{

idx = fastbin_index (nb);

mfastbinptr *fb = &fastbin (av, idx);

mchunkptr pp;

victim = *fb; // 保存待分配的堆块至victim变量

if (victim != NULL) // 如果对应索引处bins不为空(即存在正好符合大小的堆块)

{

if (SINGLE_THREAD_P)

// 取下这个堆块

// (即将待分配堆块的fd覆盖对应bin的头节点值,使得对应的bin中第一个堆块跳过待分配的堆块,指向待分配的堆块的前一个堆块)

*fb = victim->fd;

else

REMOVE_FB (fb, pp, victim);

if (__glibc_likely (victim != NULL))

{

size_t victim_idx = fastbin_index (chunksize (victim));

if (__builtin_expect (victim_idx != idx, 0))

malloc_printerr ("malloc(): memory corruption (fast)");

check_remalloced_chunk (av, victim, nb);

// 将待分配堆块对应的bin中所有剩下的堆块填充至tcache对应大小的bin中

/* While we're here, if we see other chunks of the same size,

stash them in the tcache. */

size_t tc_idx = csize2tidx (nb);

if (tcache && tc_idx < mp_.tcache_bins)

{

mchunkptr tc_victim;

/* While bin not empty and tcache not full, copy chunks. */

while (tcache->counts[tc_idx] < mp_.tcache_count // mp_.tcache_count为7

&& (tc_victim = *fb) != NULL)

{

if (SINGLE_THREAD_P)

*fb = tc_victim->fd; // 继续取下堆块,循环将堆块放入tcache

else

{

REMOVE_FB (fb, pp, tc_victim);

if (__glibc_unlikely (tc_victim == NULL))

break;

}

tcache_put (tc_victim, tc_idx); // 放入tcache

}

}

void *p = chunk2mem (victim); // 转换成实际返回给用户的指针

alloc_perturb (p, bytes);

return p;

}

}

}如果

fastbins中没有符合大小的堆块,那么继续判断实际需要分配的堆块大小是否在smallbins的范围之内,如果在范围之内,那么就检查smallbins中是否存在恰好符合大小的堆块,如果存在,取下此堆块,且将此堆块对应的bin中剩余的堆块放入tcache中,最后返回取下的堆块,结束malloc流程1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57/*

If a small request, check regular bin. Since these "smallbins"

hold one size each, no searching within bins is necessary.

(For a large request, we need to wait until unsorted chunks are

processed to find best fit. But for small ones, fits are exact

anyway, so we can check now, which is faster.)

*/

if (in_smallbin_range (nb)) // 判断是否属于smallbins

{

idx = smallbin_index (nb);

bin = bin_at (av, idx);

if ((victim = last (bin)) != bin) // 当存在恰好符合大小的堆块

{

bck = victim->bk;

if (__glibc_unlikely (bck->fd != victim))

malloc_printerr ("malloc(): smallbin double linked list corrupted");

set_inuse_bit_at_offset (victim, nb);

bin->bk = bck; // 取下堆块

bck->fd = bin;

if (av != &main_arena)

set_non_main_arena (victim);

check_malloced_chunk (av, victim, nb);

// 与fastbin一致,将bin中剩余的堆块放入tcache

/* While we're here, if we see other chunks of the same size,

stash them in the tcache. */

size_t tc_idx = csize2tidx (nb);

if (tcache && tc_idx < mp_.tcache_bins)

{

mchunkptr tc_victim;

/* While bin not empty and tcache not full, copy chunks over. */

while (tcache->counts[tc_idx] < mp_.tcache_count

&& (tc_victim = last (bin)) != bin)

{

if (tc_victim != 0)

{

bck = tc_victim->bk;

set_inuse_bit_at_offset (tc_victim, nb);

if (av != &main_arena)

set_non_main_arena (tc_victim);

bin->bk = bck;

bck->fd = bin;

tcache_put (tc_victim, tc_idx);

}

}

}

void *p = chunk2mem (victim); // 转换为mem,返回给用户

alloc_perturb (p, bytes);

return p;

}

}当走到这一步时,说明实际需要分配的堆块有如下几种情况

- 实际需要分配的是

smallbins中的堆块,但是smallbins中没有恰好符合其大小的堆块可以被分配 - 实际需要分配的是

largebins中的堆块

- 实际需要分配的是

接着,判断申请的堆块是否属于

largebins,但是在取得对应的索引后,并没有直接去查找largebins中是否有对应大小的堆块可以被分配,而是执行了malloc_consolidate函数1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17/*

If this is a large request, consolidate fastbins before continuing.

While it might look excessive to kill all fastbins before

even seeing if there is space available, this avoids

fragmentation problems normally associated with fastbins.

Also, in practice, programs tend to have runs of either small or

large requests, but less often mixtures, so consolidation is not

invoked all that often in most programs. And the programs that

it is called frequently in otherwise tend to fragment.

*/

else

{

idx = largebin_index (nb);

if (atomic_load_relaxed (&av->have_fastchunks))

malloc_consolidate (av); // 整理fastbins

}malloc_consolidate函数旨在将所有fastbins中的堆块(无论大小多少),尝试将它们合并成大的堆块并放入unsortedbins中,而对无法合并的堆块,则也一起放入unsortedbins当中在整理完成并放入

unsortedbins后,malloc进入了一个大循环,这个大循环中有一个小循环将会将unsortedbins中的所有堆块一个接一个地取出来,并将其放入对应的bin中(small/largebins中的bin),详细步骤可看注释1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

INTERNAL_SIZE_T tcache_nb = 0;

size_t tc_idx = csize2tidx (nb); // nb为我们申请的堆块,这里假设申请的堆块在tcache范围内

if (tcache && tc_idx < mp_.tcache_bins) // 判断一下是否申请的堆块在tcache范围内

tcache_nb = nb; // 如果在tcache范围内,给tcache_nb赋值

int return_cached = 0; // 这是一个flag,记录是否有合适的堆块被放入了tcache中

tcache_unsorted_count = 0; // 计数器,记录处理了多少unsortedbins中的堆块

for (;; ) // 大循环

{

int iters = 0;

while ((victim = unsorted_chunks (av)->bk) != unsorted_chunks (av)) // 小循环,一个接一个判断

{

bck = victim->bk;

if (__builtin_expect (chunksize_nomask (victim) <= 2 * SIZE_SZ, 0)

|| __builtin_expect (chunksize_nomask (victim)

> av->system_mem, 0))

malloc_printerr ("malloc(): memory corruption");

size = chunksize (victim);

/*

If a small request, try to use last remainder if it is the

only chunk in unsorted bin. This helps promote locality for

runs of consecutive small requests. This is the only

exception to best-fit, and applies only when there is

no exact fit for a small chunk.

*/

// 如果我们申请的堆块是属于smallbins的,并且unsortedbins中有且仅有一个last_remainder

// 同时last_remainder被切割后剩余的大小仍然大于MINSIZE(32字节)

// 那么就尝试从last_remainder中切割一块下来进行分配

if (in_smallbin_range (nb) &&

bck == unsorted_chunks (av) &&

victim == av->last_remainder &&

(unsigned long) (size) > (unsigned long) (nb + MINSIZE))

{

/* split and reattach remainder */

// 切割last_remainder并将剩下的last_remainder重新放入unsortedbins

remainder_size = size - nb;

remainder = chunk_at_offset (victim, nb);

unsorted_chunks (av)->bk = unsorted_chunks (av)->fd = remainder;

av->last_remainder = remainder;

remainder->bk = remainder->fd = unsorted_chunks (av);

if (!in_smallbin_range (remainder_size))

{

remainder->fd_nextsize = NULL;

remainder->bk_nextsize = NULL;

}

set_head (victim, nb | PREV_INUSE |

(av != &main_arena ? NON_MAIN_ARENA : 0));

set_head (remainder, remainder_size | PREV_INUSE);

set_foot (remainder, remainder_size);

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

// 从unsortedbins中取下一个堆块

/* remove from unsorted list */

unsorted_chunks (av)->bk = bck;

bck->fd = unsorted_chunks (av);

/* Take now instead of binning if exact fit */

// 如果取下的堆块大小恰好等于我们申请的堆块大小

if (size == nb)

{

set_inuse_bit_at_offset (victim, size);

if (av != &main_arena)

set_non_main_arena (victim);

/* Fill cache first, return to user only if cache fills.

We may return one of these chunks later. */

// 如果取下的堆块大小恰好等于我们申请的堆块大小

// 并且tcache对应大小的bin中没有被填满

// 那么就先将这个堆块放入tcache对应大小的bin中,暂时不返回给用户,将return_cached置1并继续循环

if (tcache_nb

&& tcache->counts[tc_idx] < mp_.tcache_count)

{

tcache_put (victim, tc_idx);

return_cached = 1;

continue;

}

else

{

// 在没有开启tcache机制的情况下,如果取下的堆块大小恰好等于我们申请的堆块大小,那么就直接将这个取下的堆块返回

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

}

/* place chunk in bin */

// 如果取下的堆块大小并不恰好等于我们申请的堆块大小

// 那么根据其大小,放入不同的bin中(small/largebins中的bin)

if (in_smallbin_range (size))

{

victim_index = smallbin_index (size);

bck = bin_at (av, victim_index);

fwd = bck->fd;

}

else

{

victim_index = largebin_index (size);

bck = bin_at (av, victim_index);

fwd = bck->fd;

/* maintain large bins in sorted order */

if (fwd != bck)

{

/* Or with inuse bit to speed comparisons */

size |= PREV_INUSE;

/* if smaller than smallest, bypass loop below */

assert (chunk_main_arena (bck->bk));

if ((unsigned long) (size)

< (unsigned long) chunksize_nomask (bck->bk))

{

fwd = bck;

bck = bck->bk;

victim->fd_nextsize = fwd->fd;

victim->bk_nextsize = fwd->fd->bk_nextsize;

fwd->fd->bk_nextsize = victim->bk_nextsize->fd_nextsize = victim;

}

else

{

assert (chunk_main_arena (fwd));

while ((unsigned long) size < chunksize_nomask (fwd))

{

fwd = fwd->fd_nextsize;

assert (chunk_main_arena (fwd));

}

if ((unsigned long) size

== (unsigned long) chunksize_nomask (fwd))

/* Always insert in the second position. */

fwd = fwd->fd;

else

{

victim->fd_nextsize = fwd;

victim->bk_nextsize = fwd->bk_nextsize;

fwd->bk_nextsize = victim;

victim->bk_nextsize->fd_nextsize = victim;

}

bck = fwd->bk;

}

}

else

victim->fd_nextsize = victim->bk_nextsize = victim;

}

mark_bin (av, victim_index);

victim->bk = bck;

victim->fd = fwd;

fwd->bk = victim;

bck->fd = victim;

/* If we've processed as many chunks as we're allowed while

filling the cache, return one of the cached ones. */

// 计数处理了多少unsortedbins中的堆块

++tcache_unsorted_count;

// 如果return_cached为1,并且mp_.tcache_unsorted_limit大于0

// 同时,已经处理的堆块数达到了上限

// 那么就从tcache中取出一个之前放入的,对应大小的堆块返回给用户

if (return_cached

&& mp_.tcache_unsorted_limit > 0

&& tcache_unsorted_count > mp_.tcache_unsorted_limit)

{

return tcache_get (tc_idx);

}

// 最多迭代10000次后结束

if (++iters >= MAX_ITERS)

break;

}

// 防止明明已经找到了对应大小的堆块

// 但由于放入了tcache中并且由于处理的unsortedbins数量没有到阀值,导致没有返回合适堆块的情况

/* If all the small chunks we found ended up cached, return one now. */

if (return_cached)

{

return tcache_get (tc_idx);

}当走到这一步时,说明在整理了碎片后,依然无法从

unsortedbins中找到恰好符合实际需要分配的堆块大小的堆块,并且此时unsortedbins中应当被清空了,其中的堆块全被分配至了small/largebins中接着,程序判断实际需要分配的堆块是否是

largebins的范围1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72/*

If a large request, scan through the chunks of current bin in

sorted order to find smallest that fits. Use the skip list for this.

*/

if (!in_smallbin_range (nb))

{

bin = bin_at (av, idx);

/* skip scan if empty or largest chunk is too small */

// 如果对应的bin是空的,或者对应的bin中最大的堆块都比实际需要分配的要小,那么直接跳过下面的步骤

if ((victim = first (bin)) != bin

&& (unsigned long) chunksize_nomask (victim)

>= (unsigned long) (nb))

{

victim = victim->bk_nextsize;

while (((unsigned long) (size = chunksize (victim)) <

(unsigned long) (nb)))

victim = victim->bk_nextsize;

/* Avoid removing the first entry for a size so that the skip

list does not have to be rerouted. */

if (victim != last (bin)

&& chunksize_nomask (victim)

== chunksize_nomask (victim->fd))

victim = victim->fd;

remainder_size = size - nb;

// 取下堆块

unlink (av, victim, bck, fwd);

/* Exhaust */

// 如果取下的堆块大小减去实际需要分配的堆块大小小于MINSIZE,那么就不要切割了,直接将这个堆块返回

if (remainder_size < MINSIZE)

{

set_inuse_bit_at_offset (victim, size);

if (av != &main_arena)

set_non_main_arena (victim);

}

/* Split */

// 如果取下的堆块大小减去实际需要分配的堆块大小大于MINSIZE

// 那么切割堆块,并将剩下的部分放入unsortedbins中

else

{

remainder = chunk_at_offset (victim, nb);

/* We cannot assume the unsorted list is empty and therefore

have to perform a complete insert here. */

bck = unsorted_chunks (av);

fwd = bck->fd;

if (__glibc_unlikely (fwd->bk != bck))

malloc_printerr ("malloc(): corrupted unsorted chunks");

remainder->bk = bck;

remainder->fd = fwd;

bck->fd = remainder;

fwd->bk = remainder;

if (!in_smallbin_range (remainder_size))

{

remainder->fd_nextsize = NULL;

remainder->bk_nextsize = NULL;

}

set_head (victim, nb | PREV_INUSE |

(av != &main_arena ? NON_MAIN_ARENA : 0));

set_head (remainder, remainder_size | PREV_INUSE);

set_foot (remainder, remainder_size);

}

// 返回堆块

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

}到这儿,说明实际需要分配的堆块在

smallbins、largebins、unsortedbins(整理碎片后的)中都没有正好符合大小的堆块可以被分配,那么,程序将尝试寻找一个大一些的堆块1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119/*

Search for a chunk by scanning bins, starting with next largest

bin. This search is strictly by best-fit; i.e., the smallest

(with ties going to approximately the least recently used) chunk

that fits is selected.

The bitmap avoids needing to check that most blocks are nonempty.

The particular case of skipping all bins during warm-up phases

when no chunks have been returned yet is faster than it might look.

*/

// 利用bitmap快速判断哪一些bin(small/largebins中的bin)中有堆块存在,防止浪费效率

++idx;

bin = bin_at (av, idx);

// 判断堆块属于哪一个block

block = idx2block (idx);

// 取出32位长的对应block的map值

map = av->binmap[block];

// 将idx转化为bit,准备与map值进行比较

bit = idx2bit (idx);

for (;; )

{

// 比较idx转化为的bit值是否大于map值,如果大于,说明此block中没有任何一个bin中拥有比实际需要分配的堆块更大的堆块

/* Skip rest of block if there are no more set bits in this block. */

if (bit > map || bit == 0)

{

do

{

// ++block,尝试查找下一个block

// 当不存在任何一个bin,其中的空闲堆块比实际需要分配的堆块要大,那么直接使用top_chunk(下面会讲)

if (++block >= BINMAPSIZE) /* out of bins */

goto use_top;

}

// 尝试获取下一个block对应的map值,判断其是否是0

// 如果是0,说明下一个block中所包含的任何一个bin中都没有空闲堆块

while ((map = av->binmap[block]) == 0);

// 如果存在bit<map的情况,那么说明存在空闲堆块比实际需要分配的堆块要大

// 并且此堆块所在的bin一定在此block所包含的bin中

// 那么,将bin定位至此block中的第一个bin的位置

bin = bin_at (av, (block << BINMAPSHIFT));

bit = 1;

}

// 从此block中的第一个bin开始查找,直到找到那个存在空闲堆块的bin

/* Advance to bin with set bit. There must be one. */

while ((bit & map) == 0)

{

bin = next_bin (bin);

bit <<= 1; // bit作为计数器,每找一个左移一位,标记了此时检查到的bin(和binmap原理一样)

assert (bit != 0); // bit作为int,4字节长,如果左移至溢出,说明出现了问题,并没有找到对应的bin(这是不合理的,因为上面的程序保证了一定会找到)

}

// 找到对应的bin后,取出bin中的第一个堆块

/* Inspect the bin. It is likely to be non-empty */

victim = last (bin);

// 检查是否是误报,因为binmap的值不是实时更新的,可能对应位的值是假的1

/* If a false alarm (empty bin), clear the bit. */

if (victim == bin)

{

av->binmap[block] = map &= ~bit; /* Write through */

bin = next_bin (bin);

bit <<= 1;

}

else

{

// 分配这个bin中的堆块

size = chunksize (victim);

// 取第一个就可以,因为第一个就已经足够大

/* We know the first chunk in this bin is big enough to use. */

assert ((unsigned long) (size) >= (unsigned long) (nb));

remainder_size = size - nb;

// 取下堆块

/* unlink */

unlink (av, victim, bck, fwd);

// 下面的步骤和上面分配largebins一样了,不再细说

/* Exhaust */

if (remainder_size < MINSIZE)

{

set_inuse_bit_at_offset (victim, size);

if (av != &main_arena)

set_non_main_arena (victim);

}

/* Split */

else

{

remainder = chunk_at_offset (victim, nb);

/* We cannot assume the unsorted list is empty and therefore

have to perform a complete insert here. */

bck = unsorted_chunks (av);

fwd = bck->fd;

if (__glibc_unlikely (fwd->bk != bck))

malloc_printerr ("malloc(): corrupted unsorted chunks 2");

remainder->bk = bck;

remainder->fd = fwd;

bck->fd = remainder;

fwd->bk = remainder;

/* advertise as last remainder */

if (in_smallbin_range (nb))

av->last_remainder = remainder;

if (!in_smallbin_range (remainder_size))

{

remainder->fd_nextsize = NULL;

remainder->bk_nextsize = NULL;

}

set_head (victim, nb | PREV_INUSE |

(av != &main_arena ? NON_MAIN_ARENA : 0));

set_head (remainder, remainder_size | PREV_INUSE);

set_foot (remainder, remainder_size);

}

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

}如果尝试寻找一个较大的堆块也失败了,那么就只能使用

top_chunk了1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64use_top:

/*

If large enough, split off the chunk bordering the end of memory

(held in av->top). Note that this is in accord with the best-fit

search rule. In effect, av->top is treated as larger (and thus

less well fitting) than any other available chunk since it can

be extended to be as large as necessary (up to system

limitations).

We require that av->top always exists (i.e., has size >=

MINSIZE) after initialization, so if it would otherwise be

exhausted by current request, it is replenished. (The main

reason for ensuring it exists is that we may need MINSIZE space

to put in fenceposts in sysmalloc.)

*/

victim = av->top;

size = chunksize (victim);

// 如果topchunk被切割后,剩下的大小大于等于MINSIZE,那么就切割topchunk

if ((unsigned long) (size) >= (unsigned long) (nb + MINSIZE))

{

remainder_size = size - nb;

remainder = chunk_at_offset (victim, nb);

// 更新malloc_state中的topchunk的地址

av->top = remainder;

set_head (victim, nb | PREV_INUSE |

(av != &main_arena ? NON_MAIN_ARENA : 0));

set_head (remainder, remainder_size | PREV_INUSE);

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

// 如果发现topchunk被切割后,剩下的大小小于MINSIZE

// 并且此时fastbins中存在堆块,那么调用malloc_consolidate

// 合并fastbins中的堆块并将其放入unsortedbins中,再尝试一次大循环

// 这么做的原因是防止实际上申请的是一个small chunk

// 但是由于topchunk、small/largebins中都没有恰好/更大的堆块了,从而导致无法分配

// (这里调用malloc_consolidate是由于如果实际需要分配的是small chunk,那么在之前的步骤中是不会触发malloc_consolidate的)

/* When we are using atomic ops to free fast chunks we can get

here for all block sizes. */

else if (atomic_load_relaxed (&av->have_fastchunks))

{

malloc_consolidate (av);

/* restore original bin index */

if (in_smallbin_range (nb))

idx = smallbin_index (nb);

else

idx = largebin_index (nb);

}

// 到这儿,说明内存已经完全不够用了,那么就调用sysmalloc,向内核申请更多的内存

/*

Otherwise, relay to handle system-dependent cases

*/

else

{

void *p = sysmalloc (nb, av);

if (p != NULL)

alloc_perturb (p, bytes);

return p;

}

}

Binmap

这里要插一个知识点,也就是在大循环查找较大的堆块的过程中,有提到一个结构:binmap,这里简单介绍一下这个结构

如果仔细看malloc_state的构成,相信大家已经找到了这个binmap

1 | /* Normal bins packed as described above */ |

那么这个binmap主要是用来做什么呢?

实际上binmap的主要作用,就是用来标示当前一个bin中是否存在空闲的堆块,这种结构可以帮助我们在malloc查找较大堆块的时候快速分辨 所有的bin中哪些存在堆块,而不用去遍历每一个bin,节省了时间

这里有一点要注意,binmap的位计数从0开始,一共有128位(0-127),但是实际使用到的位只有125位

- unsortedbins中堆块的变化不会被记录到binmap中,也即binmap的第1位恒为0

- 第0位以及第127位不被使用,恒为0

- 所有的(small/largebins)中的bin中是否存在堆块(0 or 1),被记录在binmap的第x位(x为bin的索引值)

与binmap有关的宏如下

1 | /* |

可以看到BINMAPSIZE这个宏,这个宏计算后实际上是4,这代表了binmap将所有的bin分成了4块,相当于binmap[4],每个数组元素为32位长,每一位都代表了对应索引的bin中是否存在空闲堆块(0=不存在,1=存在)

而之前我们用到的idx2block这个宏,就是用来根据bin的索引idx来得到其属于哪一个block的

宏idx2bit则是用来通过bin的索引得到bit值,用来与bin所属的block的map值来进行比较,如果bit值大于map值,那么就说明此block中不存在任何一个bin拥有比实际需要分配的堆块更大的空闲堆块

看个例子

可以看到,此时smallbins中大小为0x60的bin中存在空闲堆块

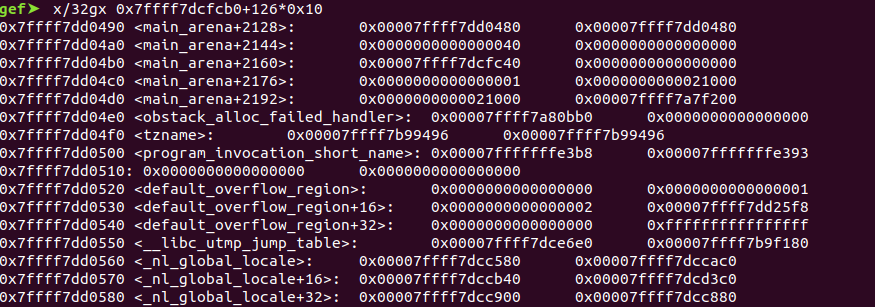

此时binmap的值

可以看到binmap位于0x7ffff7dd04a0-0x7ffff7dd04b0

用上面的宏计算一下0x60的堆块应当处于哪一个block,顺便计算一下bit的值:

1 | idx = 0x60<<4 = 6 |

我们先根据block的值,得到对应的map值

因为block为0,那么就说明map的值是binmap[0]的值,此时为0x00000040

可以注意到,0x40其实是01000000,第7位是1,这代表了”索引为6处(从0开始)的bin中存在空闲堆块”

这样再看之前寻找更大堆块的逻辑,就很好理解了

1 | ++idx; |

至于为何说binmap不是实时的,仔细看下注释和代码就能理解了

1 | /* |

sysmalloc

根据上面的流程,当内存不足时,程序就需要调用sysmalloc,向内核申请更多的内存

其中申请内存的方法分为两种,mmap和brk

mmap

当满足如下条件时,sysmalloc会调用mmap来扩展内存

- 系统支持mmap

- 实际需要分配的堆块大小大于

mmap threshold,这个值在程序中为128*1024=128kb - 已经mmap的区域个数需要小于其上限

mp_.n_mmaps_max,这个值在程序中为65536

相关的宏和代码如下所示

1 | DEFAULT_MXFAST 64 (for 32bit), 128 (for 64bit) |

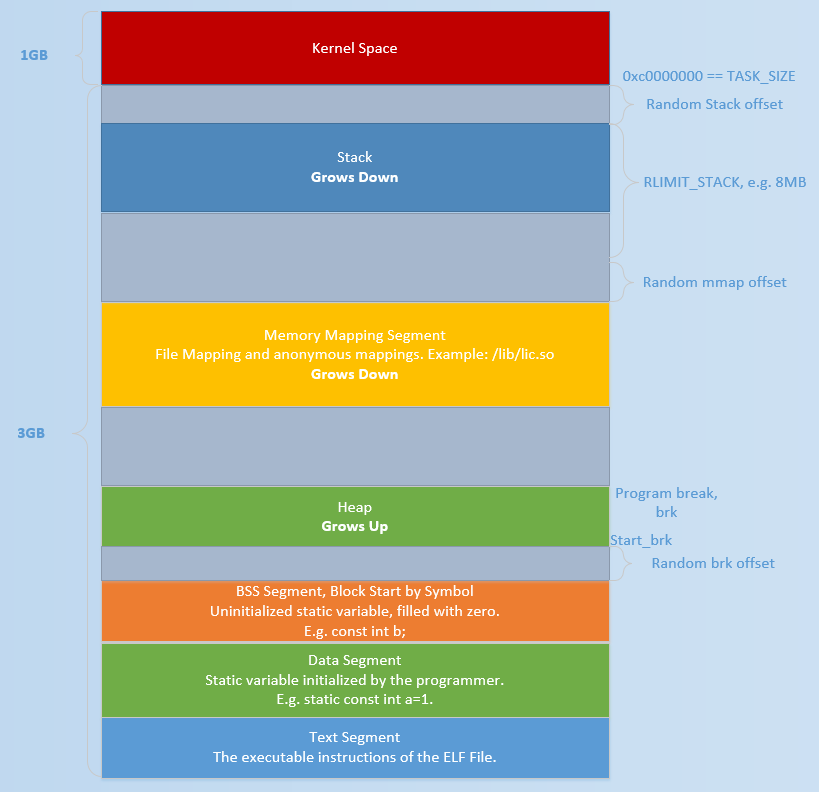

brk

当不满足mmap的条件时,那么程序就会尝试使用brk,尝试通过抬高brk的方式来拓展堆内存

当程序刚开始运行时堆的起始地址start_brk以及堆的当前末尾brk指向同一地址。根据是否开启 ASLR,两者的具体位置会有所不同

- 不开启 ASLR 保护时,

start_brk以及brk会指向data/bss段的结尾。 - 开启 ASLR 保护时,

start_brk以及brk也会指向同一位置,只是这个位置是在data/bss段结尾后的随机偏移处。

如下图

具体细节暂时不是重点,可以参看ctf-wiki上的大致介绍 https://ctf-wiki.github.io/ctf-wiki/pwn/linux/glibc-heap/implementation/malloc-zh/#sysmalloc

Free

讲讲free的流程

__libc_free

和malloc一样,实际上我们调用free也不是调用free函数,而是调用了__libc_free函数

当我们free掉一个堆块时,步骤如下

首先和malloc一样,先检查是否存在用户自定义的hook函数,如果存在,调用hook函数后返回

1 | void |

检查mem指针是否是空指针,如果是空指针,直接返回

1 | if (mem == 0) /* free(0) has no effect */ |

检查需要释放的堆块是否是mmap出来的,如果是,调用munmap_chunk函数释放

1 | if (chunk_is_mmapped (p)) /* release mmapped memory. */ |

不是的话,和malloc一样,调用_int_free函数

_int_free

首先_int_free函数判断指针是否非法

1 | static void |

如果使用了tcache,判断堆块大小是否在tcache范围内,如果在范围内,则放入tcache

1 |

|

如果没放入tcache,考虑fastbins

1 | /* |

如果不放入fastbins,那么考虑合并堆块

1 | /* |

malloc_consolidate

最后讲讲经常提到的malloc_consolidate函数

1 | /* |